Google has introduced Gemini 2.0, its most ambitious AI model yet, announced by CEO Sundar Pichai on X and on the company’s official blog. Pichai claims that this isn’t just another upgrade: it marks the beginning of the agentic era, where AI doesn’t just assist but acts, taking initiative to think, plan, and solve problems.

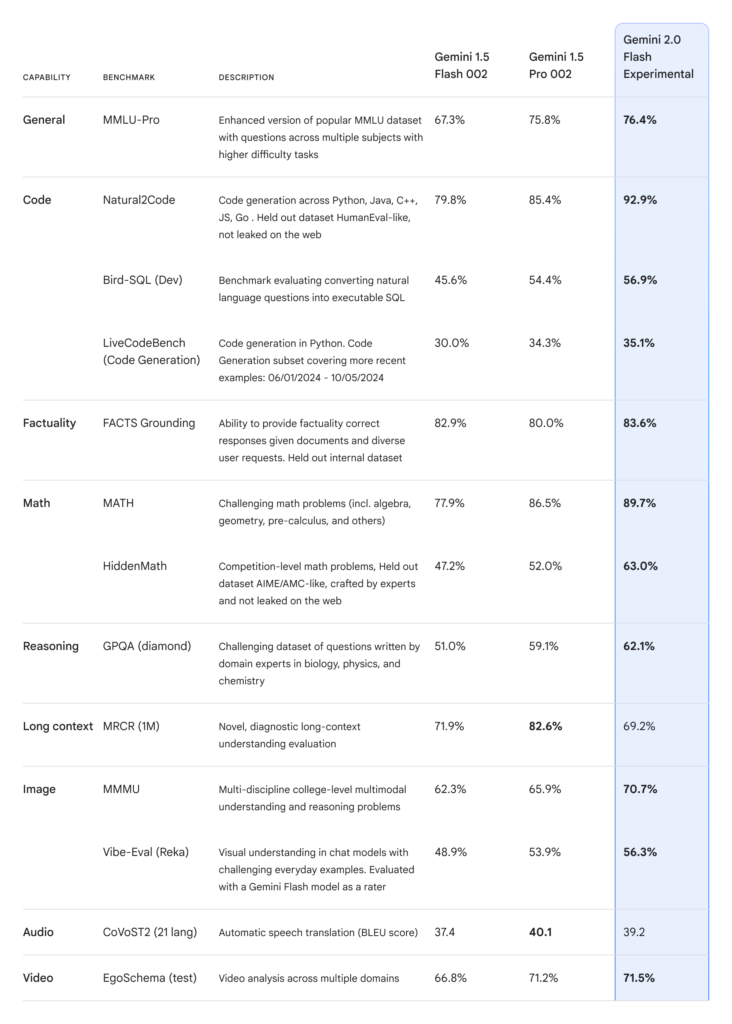

We’re kicking off the start of our Gemini 2.0 era with Gemini 2.0 Flash, which outperforms 1.5 Pro on key benchmarks at 2X speed (see chart below). I’m especially excited to see the fast progress on coding, with more to come.

— Sundar Pichai (@sundarpichai) December 11, 2024

Developers can try an experimental version in AI… pic.twitter.com/iEAV8dzkaW

At the center of this release is Gemini 2.0 Flash, a faster, more efficient version built for real-world tasks. But Google didn’t stop there. It teased projects like Project Astra and Project Mariner, offering a sneak peek at what’s coming: AI that can navigate web browsers, adapt to your needs, and even help you find everyday things like your glasses.

This isn’t just another step forward—it’s a glimpse into a future where AI becomes proactive, solving problems and changing how we live, work, and interact with technology.

What Makes Gemini 2.0 Special?

Gemini 2.0 isn’t just faster; it’s built to tackle more complex problems with better accuracy and speed. Google claims it’s twice as fast as its predecessor, Gemini 1.5 Pro, without cutting corners on performance. That’s a massive win for developers and businesses who need reliable AI at scale.

Beyond text, Gemini 2.0 is now fully multimodal—it can handle inputs and outputs in text, images, and even audio. Need a voice in multiple languages? Gemini’s got you. Want an AI to generate an image alongside text? That’s here too. Plus, it introduces real-time features through its Multimodal Live API, opening doors to new applications that demand immediate responses.

A new feature called Deep Research turns Gemini 2.0 into a research powerhouse. It can analyze complex topics, compile reports, and break down detailed information in ways that make it invaluable for businesses and students alike.

Gemini 2.0 Flash

The first release of Gemini 2.0, Flash, is built with developers in mind. It’s optimized for speed and efficiency, delivering results twice as fast as Gemini 1.5 Pro. Google says it even outperforms competitors in benchmarks like SQuAD 1.1 for question answering, scoring 92.5 EM and 95.5 F1—a significant jump over the original Gemini.
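
For readers unfamiliar with the two SQuAD metrics quoted above: EM (exact match) counts a prediction as correct only if it equals the reference answer after light normalization, while F1 measures token overlap between the two. The sketch below follows the normalization steps commonly used for SQuAD-style scoring (lowercasing, stripping punctuation and English articles). It is a simplified, single-reference illustration with hypothetical function names, not Google’s evaluation code.

```python
import re
import string
from collections import Counter


def normalize(text: str) -> str:
    """Lowercase, drop punctuation and English articles, collapse whitespace."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in string.punctuation)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())


def exact_match(prediction: str, reference: str) -> float:
    """1.0 if the normalized strings are identical, else 0.0."""
    return float(normalize(prediction) == normalize(reference))


def f1_score(prediction: str, reference: str) -> float:
    """Harmonic mean of token-level precision and recall."""
    pred_tokens = normalize(prediction).split()
    ref_tokens = normalize(reference).split()
    if not pred_tokens or not ref_tokens:
        # Both empty counts as a match; one empty counts as a miss.
        return float(pred_tokens == ref_tokens)
    # Multiset intersection counts each shared token at most as often
    # as it appears in both answers.
    overlap = sum((Counter(pred_tokens) & Counter(ref_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(ref_tokens)
    return 2 * precision * recall / (precision + recall)
```

With a reference answer of "Paris", the prediction "in Paris, France" scores 0 on EM but about 0.5 on F1, which is why the F1 figure is always at least as high as EM. Benchmark numbers are these per-question scores averaged over the whole dataset and reported as percentages.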

What makes Flash even more impressive is its versatility. It can execute code, integrate with tools like Google Search, and call third-party functions defined by the developer. That means developers can build smarter, faster applications that respond to real-world tasks with very low latency.

Starting today, developers can access Gemini 2.0 Flash via Google AI Studio and Vertex AI. Early-access partners will also get hands-on with advanced features like native image generation and multilingual text-to-speech, with a wider rollout planned for January 2025.
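
To give a rough sense of what calling Flash with its built-in tools might look like, here is a minimal sketch that only assembles a request for the Gemini REST API and sends nothing. The endpoint, the experimental model name `gemini-2.0-flash-exp`, and the `google_search` and `code_execution` tool fields are assumptions based on the public API at launch and may have changed; `build_request` is a hypothetical helper, not part of any Google SDK.

```python
import json

# Assumptions: public Gemini REST endpoint and the experimental model name
# used at launch. Check the current API reference before relying on either.
API_BASE = "https://generativelanguage.googleapis.com/v1beta"
MODEL = "gemini-2.0-flash-exp"


def build_request(prompt: str,
                  use_search: bool = False,
                  use_code_execution: bool = False) -> tuple[str, dict]:
    """Return (url, json_body) for a generateContent call; nothing is sent."""
    body = {"contents": [{"role": "user", "parts": [{"text": prompt}]}]}

    # Built-in tools are switched on by listing them; field names are
    # assumed from the REST documentation for Gemini 2.0.
    tools = []
    if use_search:
        tools.append({"google_search": {}})
    if use_code_execution:
        tools.append({"code_execution": {}})
    if tools:
        body["tools"] = tools

    url = f"{API_BASE}/models/{MODEL}:generateContent"
    return url, body


if __name__ == "__main__":
    url, body = build_request("What is the 20th prime number?",
                              use_code_execution=True)
    print(url)
    print(json.dumps(body, indent=2))
```

Sending the request would mean POSTing the JSON body to the URL with an API key from Google AI Studio. Vertex AI uses a different endpoint and authentication scheme, so this sketch does not apply there as written.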

AI Agents: Google’s Vision for the Future

Google isn’t stopping with just a faster model. Gemini 2.0 powers a new generation of AI agents designed to work independently and help you get things done.

Project Astra

Project Astra is Google’s prototype for a universal AI assistant. It doesn’t just answer questions—it remembers details, uses tools like Google Search and Maps, and interacts naturally in multiple languages.

Powered by Gemini 2.0, Astra now handles real-time audio and video, giving it human-like responsiveness. It’s being tested on Android devices and even experimental smart glasses. Imagine walking down the street, asking where you left your car keys, and your AI assistant actually answering you.

Project Mariner

Mariner is Google’s early attempt at teaching AI to control your browser. It can read, scroll, click, and fill out forms for you. While still experimental, it achieved an 83.5% accuracy score on WebVoyager—a benchmark for real-world web tasks.

Mariner could one day handle online shopping, research, or even work tasks. Think about it—an AI that fills out tedious forms so you don’t have to.

Jules

For developers, Google introduced Jules, an AI coding assistant that integrates with GitHub. Jules can analyze issues, plan fixes, and execute solutions under your supervision. It’s Google’s first real push toward specialized AI agents designed for complex, technical tasks.

AI in Gaming, Robotics, and Everywhere Else

Google’s Gemini 2.0 isn’t stopping at smarter search or coding—it’s pushing into gaming, robotics, and every corner of Google’s ecosystem.

In gaming, AI agents powered by Gemini 2.0 can analyze what’s happening on-screen and offer real-time suggestions to players. Google is already testing this with developers like Supercell, the creators of Clash of Clans. Picture an AI coach guiding you to victory, analyzing your gameplay, and strategizing alongside you.

For robotics, Gemini 2.0’s spatial reasoning skills are being explored to help machines navigate and interact with the physical world. The work is still in its early stages, but the potential is huge: robots that assist with real-world tasks, from organizing warehouses to helping with everyday household chores.

But Google’s ambitions for Gemini 2.0 don’t stop there. The model is set to power everything—from Search to Workspace to the Gemini app. AI Overviews in Search, already used by over a billion people, will become even more powerful, tackling multi-step math problems, coding tasks, and complex queries effortlessly.

This evolution isn’t just about answering questions anymore. It’s about creating AI that solves problems, takes action, and works alongside you—whether on a screen, in the physical world, or seamlessly across Google’s entire ecosystem.

Gemini 2.0 is laying the groundwork for a future where AI doesn’t just assist—it acts, adapts, and becomes an indispensable part of how we live, work, and play.

2025: The Year of Autonomous AI Agents

Google’s Gemini 2.0 is setting the foundation for a future where AI doesn’t just assist—it acts independently. Sundar Pichai, Google’s CEO, captured this shift perfectly: “If Gemini 1.0 was about organizing and understanding information, Gemini 2.0 is about making it much more useful.”

This isn’t just incremental progress; it’s the dawn of autonomous AI agents that think, decide, and take initiative. Imagine research that not only finds answers but synthesizes insights and drafts conclusions. Coding assistants that don’t stop at suggesting snippets but build entire systems on their own. Personal AI tools that anticipate your needs—booking meetings, managing projects, and even troubleshooting before you know there’s a problem. Gaming agents that analyze your gameplay in real time, coaching you like a human mentor.

The AI we’ve been waiting for isn’t just responsive anymore. It’s proactive, adaptive, and capable of acting with purpose. By 2025, the vision of AI as an autonomous agent will no longer be science fiction—it will be reality.

Developers are getting early access to Gemini 2.0 now, but the broader rollout in 2025 will be a turning point. Whether in work, education, entertainment, or everyday life, autonomous AI agents will take center stage, reshaping how we interact with technology and the world.

2025 won’t just be another year in AI—it will mark the beginning of a future where machines do more than help us. They’ll work for us, alongside us, and sometimes, ahead of us.