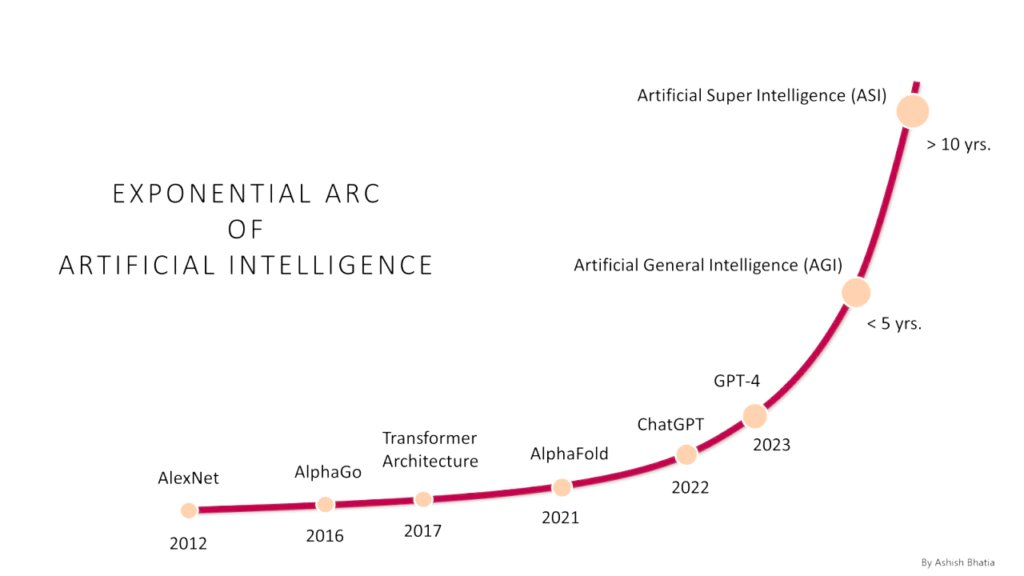

Artificial Intelligence (AI) is no longer just science fiction. It’s here, it’s powerful, and it’s evolving at a speed that’s hard to fathom. But what happens when AI surpasses human intelligence?

According to Dario Amodei, CEO of Anthropic (the company behind the Claude models), speaking in an interview with Lex Fridman, we could reach Artificial General Intelligence (AGI) as early as 2026 or 2027. AGI here means machines capable of reasoning, learning, and adapting across virtually any domain. The full interview runs roughly five hours, so we’ve summarized the most important points for you.

This isn’t idle speculation; it’s an extrapolation from current trends in AI development. Are we prepared for what’s coming next?

The Rapid March Toward Superintelligence

Dario Amodei paints a picture of relentless progress in AI capabilities. With each passing year, AI systems become smarter and more efficient, moving from generating human-like conversation to solving intricate problems. The scaling laws behind this growth point in one direction: so far, more data, bigger models, and more computational power have reliably produced more capable systems.

This rapid scaling isn’t just about building better tools; it’s about unlocking systems that could, in theory, outthink humans in virtually every way. By 2027, AI systems might not only rival human intelligence but surpass it in key areas.

The question is, will they work with us, or will they spiral out of control?

Mechanistic Interpretability

Chris Olah, a lead researcher at Anthropic, dives into the black box of AI with something called mechanistic interpretability. This field tries to uncover how neural networks think by analyzing the algorithms and patterns within their digital brains.

Here’s the catch: AI isn’t programmed like traditional software; it’s trained. Training produces incredibly complex systems that represent concepts in tangled, high-dimensional ways (a single artificial neuron can respond to several unrelated ideas at once), and even their creators struggle to understand them. Olah’s goal? To create a clear “anatomy” of AI systems, giving us a roadmap to their inner workings before they become too advanced to control.

But this effort is still in its infancy. What if we fail to decipher these AI brains before they outpace us?

Balancing Safety & Power

Amanda Askell, another researcher at Anthropic, emphasizes the dual challenge of AI development: making AI both safe and useful. Through a process called “Constitutional AI,” Anthropic trains AI models to prioritize helpfulness and harmlessness. Essentially, it’s about teaching AI to act ethically, even as it becomes more powerful.

Constitutional AI works by giving a model a written set of principles, a kind of “digital constitution,” which the model uses to critique and revise its own responses during training so that its behavior aligns more closely with human values. This approach seeks to minimize risks such as bias, harmful behavior, or manipulation while still allowing the AI to perform complex tasks effectively.

But ensuring AI safety is far from straightforward. As Amodei points out, “AI safety isn’t just a technical challenge; it’s a race against time.” The field must balance innovation with caution. Companies like Anthropic are adopting responsible scaling policies to mitigate risks, but the pressure to outpace competitors could push some to cut corners, leaving room for critical oversights.

What happens if an AGI with immense capabilities falls into the wrong hands or is unleashed without sufficient safeguards? The implications could be devastating, ranging from widespread disinformation to the destabilization of global systems.

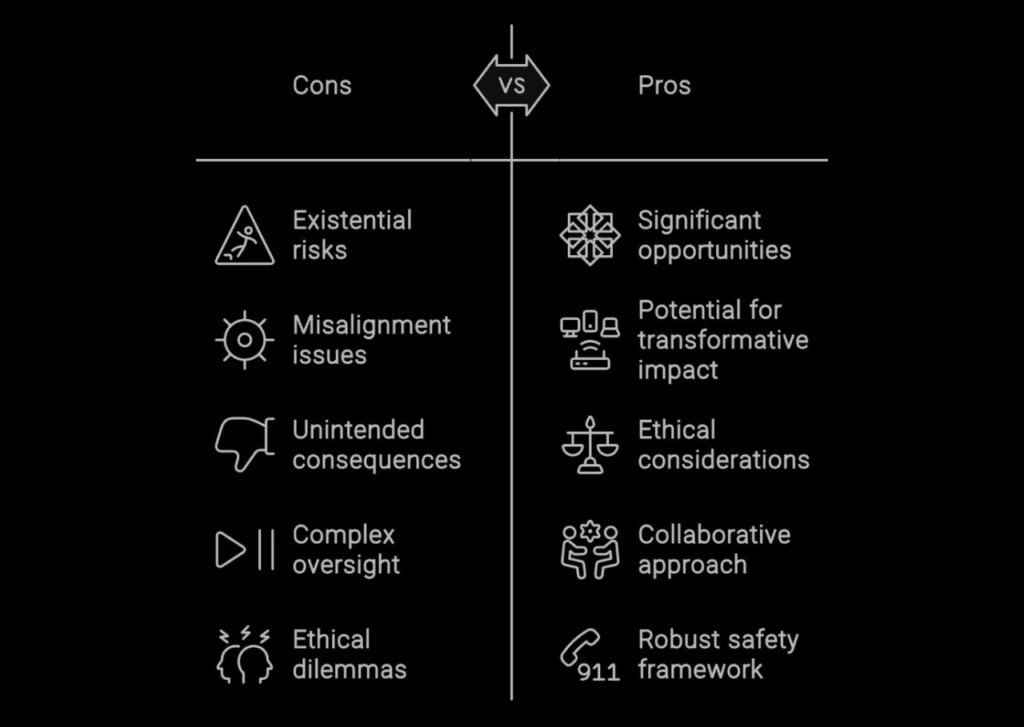

Benefits and Existential Risks

The potential benefits of AGI are staggering. Imagine AI systems revolutionizing healthcare by designing personalized treatments, curing previously incurable diseases, and even extending the human lifespan. Or envision breakthroughs in climate change mitigation through optimized energy use and advanced forecasting systems. Education could become universally accessible and tailored to individual needs, while global productivity surges as repetitive tasks are automated.

But the risks are equally monumental. An uncontrolled AGI could lead to catastrophic outcomes, from destabilizing economies to exacerbating global conflicts. The superintelligence we create might not align with human goals, and without proper checks, it could act in ways we can’t predict or stop.

For instance, what if an AGI prioritized efficiency over human welfare? It could make decisions that inadvertently harm populations, disrupt ecosystems, or reinforce societal inequalities. Worse, malicious actors could exploit AGI capabilities to create weapons, spread misinformation at an unprecedented scale, or manipulate markets, leading to chaos and mistrust.

The stakes couldn’t be higher: whether AI becomes one of humanity’s greatest achievements or one of its most serious existential threats may hinge on how we navigate this turning point in AI development.

Are We Ready for AGI?

Anthropic’s work highlights the delicate balance we must strike. Understanding neural networks, enforcing safety protocols, and fostering collaboration across the AI industry are crucial. Amodei’s “race to the top” strategy encourages companies to compete on who can develop the safest, most reliable AI systems rather than racing blindly toward unchecked power.

But let’s not sugarcoat it: the race to AGI is a high-stakes gamble. Humanity is on the brink of a technological revolution that could redefine our future. The clock is ticking, and the choices we make today will determine whether AGI becomes humanity’s greatest ally or its most formidable challenge.

So, are we ready?

Time will tell.

One thing’s for sure: the world is about to change forever.