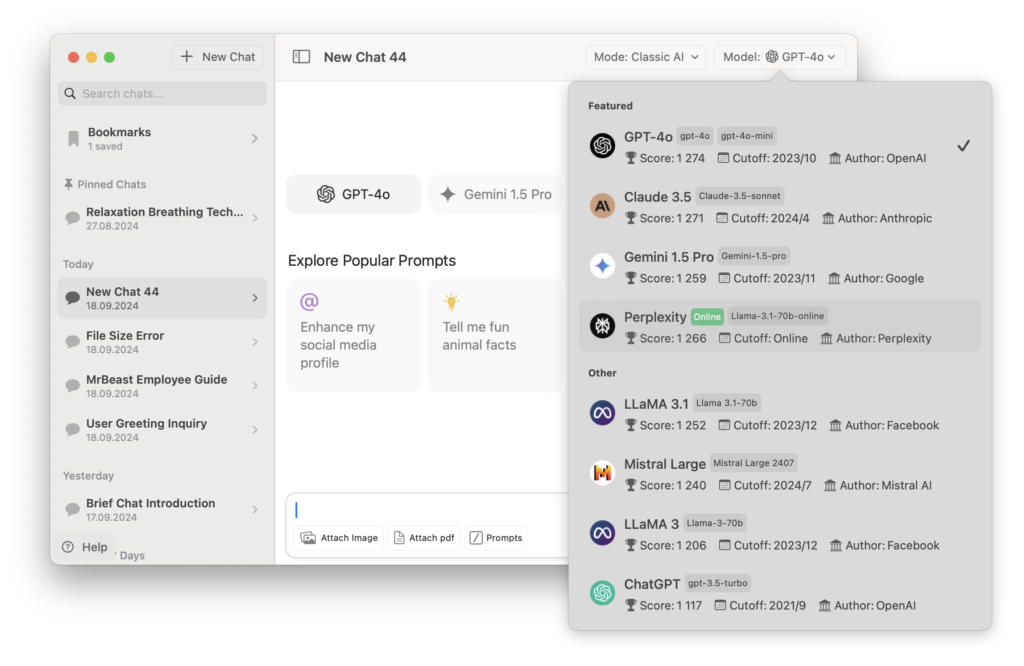

I’m excited to announce Fello AI version 4.4.0, with macOS 15.0 Sequoia optimization, faster response times, and improved model performance. On top of that, you’ll also find two new flagship models: Perplexity Online and Mistral Large 2. Let’s look at what’s new, one by one:

Introducing Perplexity Online: AI, Always Up to Date

One of the key highlights in this release is Perplexity Online, a model designed to provide real-time, constantly updated information.

But what exactly is Perplexity AI?

Perplexity AI is a conversational search engine that uses large language models to provide direct, real-time answers to user questions, with cited sources for verification.

It aims to streamline search by reducing the need to sift through links. Recently, Perplexity raised funding and launched a U.S. election hub, though it has faced scrutiny over its data collection practices.

Key Features of Perplexity Online:

- Real-Time Accuracy: Unlike static models, Perplexity Online is connected to live data sources, constantly updating to reflect the latest facts and trends.

- Built on Llama 3.1: Powered by Meta’s Llama 3.1 model, it delivers accurate, up-to-date responses across a wide range of topics.

- Ideal for Knowledge-Intensive Queries: From current events to in-depth research, Perplexity Online keeps your answers relevant and timely.

Mistral Large 2: Power Meets Precision

We’ve also added Mistral Large 2, a flagship model built for handling complex queries with precision and speed.

Why Choose Mistral Large 2?

- State-of-the-Art Performance: Mistral Large 2 efficiently manages large datasets, delivering sophisticated outputs without slowing down.

- Versatility: Perfect for in-depth analysis, problem-solving, and creative tasks, Mistral Large 2 adapts to both technical and creative use cases.

- Energy Efficiency: Despite its power, Mistral Large 2 is optimized to use fewer resources, which makes it a good match for macOS 15.0 Sequoia’s energy-efficient design.

Other Updates

Optimized for macOS 15.0 Sequoia

Fello AI has been optimized for the latest macOS release, Sequoia, in both performance and design. Expect faster load times, seamless multitasking, and a more responsive UI that fully integrates with Sequoia’s enhanced resource management.

Faster Responses Across the Board

I’ve reduced latency for GPT-4o, Gemini 1.5 Pro, and Claude 3.5 Sonnet. You’ll notice quicker replies, whether you’re solving problems or just having casual conversations.