Why Use Llama 4 as a Desktop App?

Llama 4 is Meta’s newest open-source AI model—and it’s a game-changer. Unlike proprietary models like GPT-4o or Gemini 2.5, Llama 4 is freely available to developers and researchers. That means no paywalls, no vendor lock-in, and full control over how you use and deploy the model.

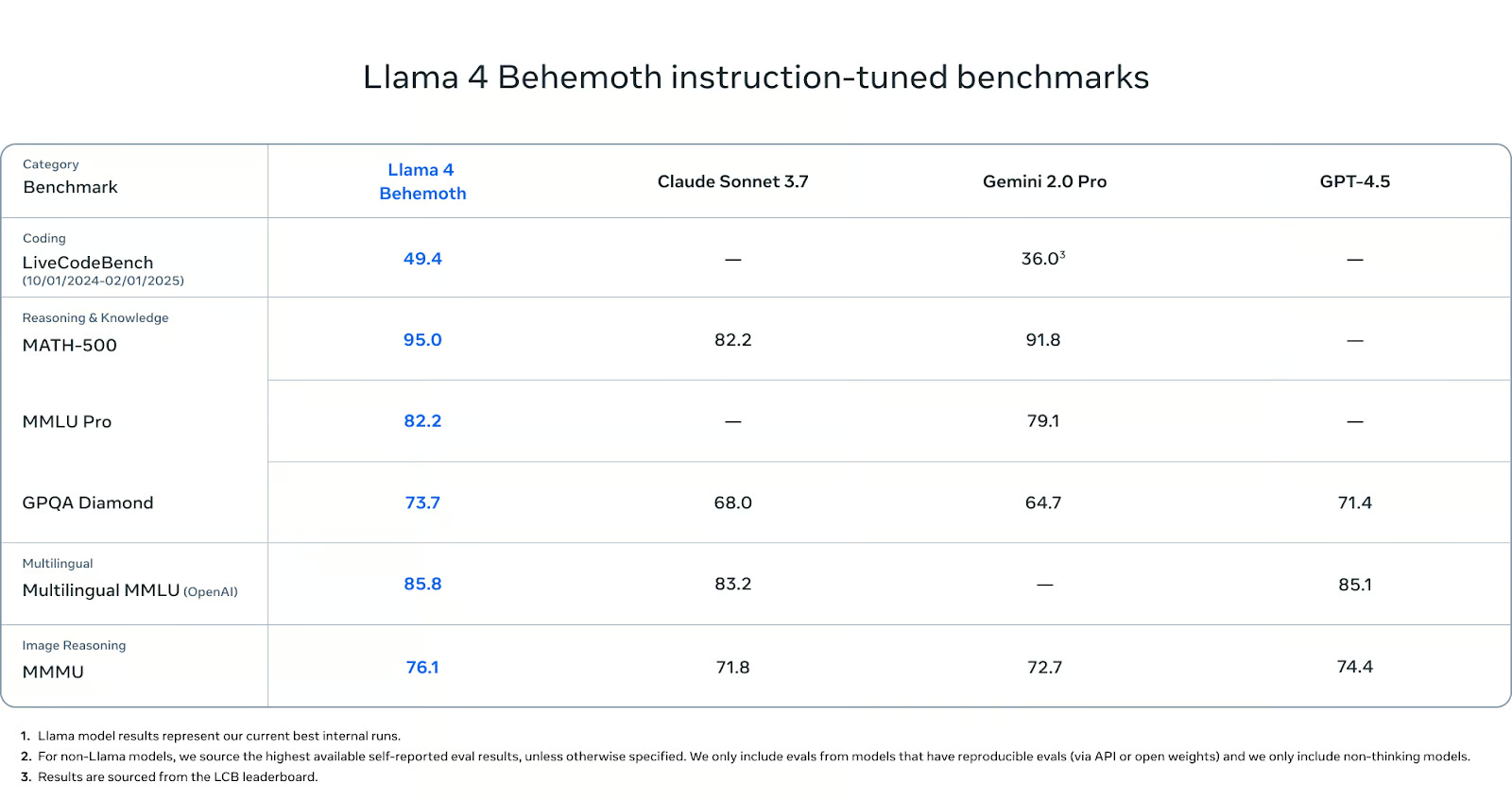

Meta released Llama 4 in multiple versions—Scout and Maverick are already production-ready, while Behemoth is still in training and expected to rival even the most powerful models from OpenAI and Anthropic. These models deliver top-tier performance, massive context windows (up to 10M tokens)und native multimodal support—all while staying cost-efficient.

Using Llama 4 via a desktop app gives you direct, low-latency access to the latest AI capabilities—right from your Mac.

How to Access & Use Llama 4 on Your Mac

Option 1: Chat with Meta AI

You can use Llama 4 right now—without downloading or installing anything—through Meta’s official AI assistant:

- WhatsApp

- Messenger

- Instagram DMs

Or on the web at Meta.ai

This is the fastest way to explore Llama 4’s capabilities. Whether you’re asking questions, testing reasoning, or just playing around, it’s a great way to experience the model casually and in real-time.

Option 2: Use Llama 4 as a Progressive Web App

Prefer the browser-based version? You can also turn the Llama model interface into a desktop shortcut:

- Open Safari and go to the Llama 4 page or the Hugging Face playground.

- Click “Share” → “Add to Home Screen.”

- Launch Llama 4 right from your dock—without needing to open a full browser window.

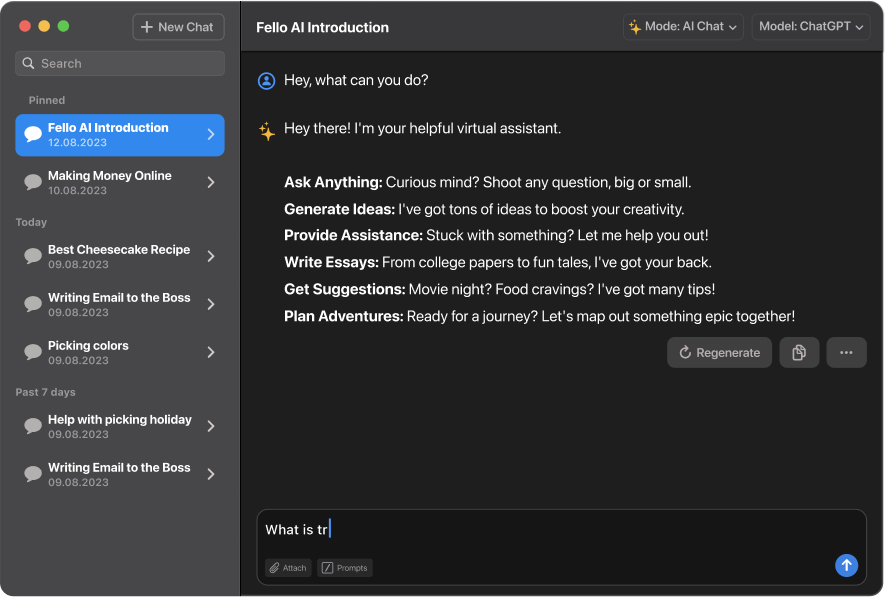

Option 3: Use Fello AI

The easiest way to run Llama 4 models on your Mac is through Fello AI, a native macOS app that brings together all major AI models—including Llama 4.

Why Fello AI?

- ✅ No login or setup required

- 🚀 Instant access to Llama 4

- 🧠 Integrated with models from Meta, OpenAI, Google, DeepSeek, …

- 🖼️ Supports multimodal input (text + image)

Steps to Install:

- Download Fello AI from the Mac App Store.

- Open the app—no account needed.

- Select Llama 4 from the model list.

- Start chatting, summarizing, analyzing documents, and more.

Perfect for researchers, developers, and productivity-focused users who want best-in-class AI tools in a single desktop environment.

Option 4: Use Llama 4 Open Weights

If you’re a developer or researcher, you can download Llama 4 Scout und Maverick from:

Both models come with open weights—so you can:

- Fine-tune them for your use case

- Deploy locally or in the cloud

- Run Scout on a single H100 GPU

- Use Maverick for high-throughput applications

There’s no waitlist or special access required. Just grab and build.

Llama 4 vs Other AI Models

In 2025, choosing the right AI model isn’t just about access—it’s about performance across real-world tasks like reasoning, multimodal inputs, long documents, and code. Meta’s Llama 4 lineup now enters the top tier, standing shoulder-to-shoulder with proprietary models from OpenAI, Google, Anthropic, and DeepSeek. Here’s how it compares.

Llama 4 vs GPT-4o (OpenAI)

Performance

Llama 4 Maverick demonstrates performance that rivals GPT-4o in both reasoning and multimodal benchmarks. In LMArena, Maverick scored 1417 ELO, putting it near the top of the leaderboard among chat-focused assistants. While GPT-4o is known for its natural conversation style and strong formatting control, Maverick delivers comparable output quality on challenging tasks like multi-hop reasoning, structured generation, and multilingual question answering.

Benchmark results show that Maverick performs strongly in logic and math-based tasks as well, often matching GPT-4o’s output in content quality and relevance. This is especially notable given that Maverick activates only 17 billion parameters at a time, thanks to its 128-expert MoE architecture—an efficient design that delivers high capability without relying on massive active parameter counts.

Llama 4 vs Gemini 2.5 Pro (Google)

Performance

Gemini 2.5 Pro is currently Google’s most advanced multimodal model, capable of processing text, images, audio, and video. However, Llama 4’s native early-fusion architecture allows it to treat text and visual tokens as a single stream, yielding improved alignment and more precise understanding when working across modalities. This shows up in tasks like explaining annotated graphs, combining visual elements with transcripts, or parsing forms with embedded visuals—where Llama 4 produces tightly grounded, unified responses.

Llama 4 Scout also redefines context length standards. With a 10 million token window, it enables large-scale reasoning across books, technical manuals, or chat histories, far exceeding Gemini’s 1 million tokens. On long-context QA and summarization tasks, Scout delivers higher consistency and recall than Gemini 2.0 Flash-Lite. Combined with its image+text processing and low latency, it becomes a powerful choice for real-world document and knowledge workflows.

Llama 4 vs Claude 4 (Anthropic)

Performance

Claude’s strength lies in conversational clarity, safety, and long-memory workflows. Yet Llama 4 Maverick matches or exceeds it on technical and logic-intensive benchmarks. Distilled from the unreleased Llama 4 Behemoth, Maverick inherits powerful reasoning abilities—showing top-tier performance on STEM benchmarks like MATH-500, GSM8Kund GPQA Diamond.

Claude’s 200K token context is impressive, but Llama 4 Scout’s 10M token window sets a new bar, allowing full ingestion of multi-document research, long contracts, or serialized conversations in a single input. Maverick also holds its own in extended conversations and coding tasks, particularly when mixed with image-based inputs or when asked to reason step-by-step without tool use. In internal testing, it proved especially strong in knowledge synthesis and scientific reasoning.

Llama 4 vs DeepSeek R1

Performance

DeepSeek R1 is one of the strongest open models to date, offering strong chain-of-thought reasoning and JSON-native output, built on a 685B parameter base with sparse activation. It’s particularly good in structured outputs, math tasks, and retrieval-based augmentation scenarios.

Llama 4 Maverick, though smaller in parameter count, performs comparably on complex reasoning and coding tasks. In coding-specific evaluations like HumanEval und MBPP, it demonstrates structured, coherent code generation with minimal hallucination. Maverick also shows stronger multimodal capabilities out of the box—thanks to early fusion—while DeepSeek R1 still leans more toward text-first interaction.

Moreover, Llama 4 models benefit from extensive distillation from Llama 4 Behemoth, making their reasoning performance dense and stable even at lower token budgets. While DeepSeek R1 emphasizes raw model size, Llama 4 emphasizes efficiency and modularity, with Scout performing well even on single-GPU setups while maintaining high-quality reasoning output.