On June 18, 2025, AI art platform Midjourney officially entered the AI video generation space with the debut of its first video model, V1. The release introduces an image-to-video (I2V) workflow that transforms still images—either generated within Midjourney or uploaded by users—into short animated clips. Unlike OpenAI’s Sora or Google’s Veo 3, which generate video directly from text prompts, V1 is rooted in Midjourney’s visual-first philosophy.

This transition represents a natural evolution for the platform, which has spent years developing one of the most popular and stylized image generators in the AI space. With V1, Midjourney focuses on extending its aesthetic strengths into motion rather than competing directly on realism or video length. The model animates imagery with a strong artistic bias, echoing the painterly, surreal qualities that define its image outputs.

Introducing our V1 Video Model. It's fun, easy, and beautiful. Available at 10$/month, it's the first video model for *everyone* and it's available now. pic.twitter.com/iBm0KAN8uy

— Midjourney (@midjourney) June 18, 2025

With over 20 million users and a dominant presence in the creative AI community, Midjourney’s leap into video feels inevitable. However, V1 is more than a feature addition—it’s the foundation for a broader strategy that includes interactive environments, 3D modeling, and real-time rendering. This first step sets the tone for Midjourney’s long-term ambitions in immersive media creation.

How V1 Works

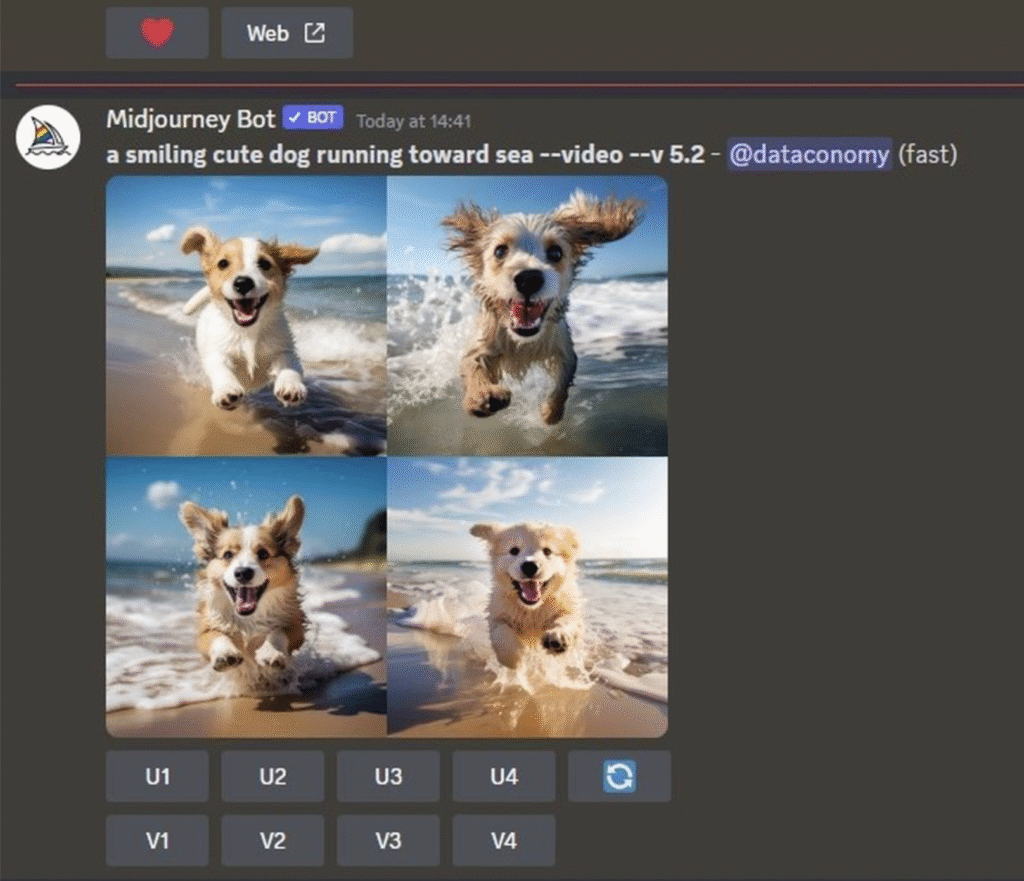

Midjourney’s V1 model is not a text-to-video engine. Instead, it specializes in animating static images—either generated inside the platform (versions V4–V7 and Niji) or uploaded by the user.

Each video generation yields four variations, each around 5 seconds long (≈125 frames at 24fps). The resolution is fixed at 480p, and currently there’s no support for HD or upscaling, although a medium-quality option is planned.

Videos can be extended in 4-second increments up to a total of 21 seconds. This enables users to chain prompts or continue an existing animation with consistent motion.
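The length arithmetic above can be checked with a short sketch. It uses only the figures quoted in this article (5-second base clip, 4-second extensions, 21-second cap, 24 fps); the function and constant names are illustrative and are not part of any Midjourney API.

```python
# Figures taken from the article; names are hypothetical, for illustration only.
BASE_SECONDS = 5        # length of the initial clip
EXTENSION_SECONDS = 4   # length added by each extension
MAX_SECONDS = 21        # stated cap on total clip length
FPS = 24                # frame rate quoted in the article


def clip_length(extensions: int) -> int:
    """Return total clip length in seconds after the given number of extensions."""
    max_extensions = (MAX_SECONDS - BASE_SECONDS) // EXTENSION_SECONDS
    if not 0 <= extensions <= max_extensions:
        raise ValueError(f"extensions must be between 0 and {max_extensions}")
    return BASE_SECONDS + extensions * EXTENSION_SECONDS


def frame_count(seconds: int) -> int:
    """Return the number of frames in a clip of the given length at FPS."""
    return seconds * FPS


if __name__ == "__main__":
    for n in range(5):
        secs = clip_length(n)
        print(f"{n} extension(s): {secs} s, {frame_count(secs)} frames")
```

Under these assumptions the cap is reached after four extensions (5 + 4 × 4 = 21). An exact 5 seconds at 24 fps is 120 frames, so the ≈125 figure quoted above suggests the base clip runs slightly over 5 seconds.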

Key features:

- Motion types: “Low Motion” (ambient) vs. “High Motion” (dynamic)

- Prompting options: Automatic motion generation or manual prompt control

- Scrubbable preview: All four variations play in one panel with hover-preview

This familiar image-first approach makes the animation process intuitive for long-time Midjourney users.

Pricing and Access

Midjourney V1 is available on the Midjourney website and is not yet supported in Discord. All paid users can access the feature, starting at $10/month (Basic plan).

Here’s the pricing breakdown:

- Basic Plan ($10/month): ~3.3 hours of fast GPU time (~200 images)

- Pro Plan ($60/month): Unlimited Relax mode and priority rendering

- Mega Plan ($120/month): Highest limits and Relax mode for video

Video jobs consume roughly 8x more GPU time than image jobs. Since each video job yields four clips of about 5 seconds, this works out to roughly one image's worth of GPU cost per second of video, so a 5-second clip costs about as much as five image generations.
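As a rough budgeting sketch, the rule of thumb of one image's worth of GPU cost per second of video can be applied to the Basic plan's approximate 200-image allowance. Both numbers come from this article and are approximations; the function names are hypothetical, and actual billing is in GPU time, so real totals will differ.

```python
# Rough estimate only: both constants are approximations quoted in the article.
IMAGES_PER_VIDEO_SECOND = 1.0   # "one image worth of GPU cost per second of video"
BASIC_PLAN_IMAGE_BUDGET = 200   # approximate images per month on the Basic plan
CLIP_SECONDS = 5                # length of a single unextended clip


def clip_cost_in_images(seconds: float) -> float:
    """Approximate cost of one clip, expressed in image generations."""
    return seconds * IMAGES_PER_VIDEO_SECOND


def clips_within_budget(image_budget: int, clip_seconds: float = CLIP_SECONDS) -> int:
    """Approximate number of whole clips that fit in an image-equivalent budget."""
    return int(image_budget // clip_cost_in_images(clip_seconds))


if __name__ == "__main__":
    print(clip_cost_in_images(CLIP_SECONDS))             # cost of a 5-second clip
    print(clip_cost_in_images(21))                       # cost of a fully extended clip
    print(clips_within_budget(BASIC_PLAN_IMAGE_BUDGET))  # 5-second clips per month
```

By this estimate a Basic subscriber who spends the whole allowance on video gets on the order of 40 five-second clips a month, which is why Relax mode matters for heavier experimentation.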

“Relax” mode—where jobs render slowly but cost less—is available only to Pro and Mega subscribers. This helps creators experiment affordably with multiple outputs.

Midjourney V1 vs Veo 3 vs Sora

Let’s break down how Midjourney’s V1 compares to the leading AI video generation platforms: Google’s Veo 3 and OpenAI’s Sora. These three models vary significantly in purpose, feature depth, and accessibility, serving different creative and commercial goals.

| Feature | Midjourney V1 | Google Veo 3 | OpenAI Sora |
|---|---|---|---|
| Type | Image-to-video | Text-to-video | Text-to-video |
| Max Length | 21 seconds | 8 seconds | 20 seconds (Pro) / 5 seconds (Plus) |
| Resolution | 480p | 4K | 1080p (Pro) / 720p (Plus) |
| Audio Support | ❌ No | ✅ Yes (native audio) | ❌ No |
| Prompt Style | Manual / Auto (image) | Text prompts with motion | Complex text & scene logic |
| Native Editing Tools | ❌ None | ✅ Timeline & visual edits | ✅ Scene chaining |
| Pricing (entry level) | $10/month | $19.99/month (Pro tier) | $20/month (Plus) |
| Target Users | Artists, hobbyists | Filmmakers, advertisers | Educators, prototypers |
| Unique Strength | Stylized animation | Cinematic realism + audio | Physics-driven realism |

Creative Use Cases Compared

- Midjourney V1 is best for users who already rely on Midjourney’s visual engine and want to animate existing images. Its surreal style and simplicity make it ideal for short-form artistic content, social media experiments, and visual storytelling that doesn’t rely on realism.

- Google Veo 3, developed by DeepMind, stands out for its cinematic polish and sound integration. With support for complex camera moves and realistic audio environments, it’s tailored for marketing videos, ads, and production-grade visuals. However, it’s limited to 8-second clips and has a high price barrier.

- OpenAI Sora focuses on realism, physics accuracy, and longer narrative potential. Though it lacks native audio, it supports more advanced scene transitions and movement dynamics. It’s ideal for educational content, simulations, and research where story consistency matters more than visual beauty.

Accessibility and Workflow

Sora and Veo are both text-prompt-driven. This means users must be specific in describing scenes and motion to get useful results. Midjourney, in contrast, simplifies the process with its image-first workflow—users start with an image and then animate, using either default or custom motion prompts.

Midjourney is also the most affordable option by a wide margin. A $10 subscription grants access to all image and video features (though limited by GPU minutes). Veo requires access to Google Ultra plans, and Sora’s Pro plan—needed for full-length videos—costs $200/month.

In sum, Midjourney offers creativity and accessibility, Veo delivers professional cinematic tools, and Sora provides dynamic realism. The right choice depends on your needs: artistic animation, commercial video, or interactive storytelling.


Style Over Realism

Rather than chasing hyperrealistic output like many of its rivals, Midjourney V1 leans fully into stylization. The animations it generates carry over Midjourney’s distinctive artistic traits—rich color palettes, painterly strokes, abstract elements, and surreal motion. The model doesn’t try to simulate real-world physics or camera optics. Instead, it produces motion that feels imaginative and expressive. For creators who value mood and creativity over photorealism, this sets V1 apart.

This aesthetic-first design makes V1 ideal for:

- Artistic short films and animated loops

- Music visualizations and ambient backgrounds

- Stylized storyboards and visual explorations

- Social content with a unique or whimsical touch

The model is not without technical quirks. In high-motion settings, users may encounter some flickering or warping, but Midjourney has tuned the system to retain consistent character form and scene coherence across frames. These visuals feel more like moving paintings than conventional animation, and that’s exactly the appeal for many in the creative community.

A key part of V1’s usability is its integrated four-panel preview system. Each animation prompt generates four video variations, which users can hover over, scrub through, pause, and extend directly in the interface. This functionality not only simplifies the evaluation process but also speeds up creative iteration—especially for users already familiar with Midjourney’s image UX. It’s a small feature, but one that reflects the company’s consistent focus on intuitive, visual-first tooling.

Midjourney’s Bigger Vision

Midjourney has made it clear that V1 is only a foundational step toward a much larger ambition: building AI models capable of powering real-time, open-world simulations. In the company’s own words, the ultimate goal is to create systems where users can generate, animate, and interact with environments, characters, and motion in three-dimensional space—all in real time.

To achieve this vision, Midjourney is constructing its capabilities brick by brick. The image models (such as V6 and V7) laid the groundwork by enabling rich, stylized still visuals. The release of V1 adds the motion layer—letting those images move, evolve, and unfold into mini-scenes. But the roadmap doesn’t stop there. The team now turns its attention to:

- 3D modeling: creating spatial depth and volumetric understanding

- Navigation: enabling camera and user-controlled movement within generated scenes

- Real-time responsiveness: processing prompts and actions instantly, like in video games

In their official announcement, Midjourney described how this will unfold over the next year. Each major component will be released as a standalone feature—first the visuals, then the motion, then the 3D space, and eventually real-time simulation. These will be unified into a single system, with future iterations potentially supporting immersive environments like virtual reality or interactive filmmaking.

At launch, the V1 Video Model is considered a technical stepping stone: a fun, accessible, and affordable animation feature that lets users experiment creatively. The current toolchain includes ‘automatic’ animation, which generates a generic motion prompt, and a ‘manual’ option where users can define how a scene should animate. Users can toggle between ‘low motion’ (slower, more ambient movement) and ‘high motion’ (camera and subject movement combined), and extend clips in 4-second increments up to a total of 21 seconds.

What’s especially notable is that Midjourney now supports animating uploaded images—meaning this isn’t limited to content generated inside the platform. Any user can drag an external image into the prompt bar, set it as a ‘start frame’, and apply motion prompts to animate it. This opens up creative potential beyond the Midjourney community and could pave the way for broader use in design, advertising, and media production.

As these tools evolve, Midjourney envisions a future where users can enter prompts like “explore a neon-lit jungle at dusk” and receive a navigable, responsive digital environment generated on the fly. The release of V1 is just the first step on that journey; the next steps include basic 3D features, real-time video rendering, and eventually sound integration.

Final Thoughts

Midjourney V1 isn’t trying to match Veo 3 or Sora in photorealism or narrative sophistication. Instead, it thrives in a space all its own—delivering fast, visually rich animations grounded in Midjourney’s signature artistic aesthetic. It’s not built for cinematic trailers or technical simulations, but rather for imaginative expression and creative experimentation.

Its real strength lies in the effortless integration into Midjourney’s existing ecosystem. Users already familiar with its image-generation tools can adopt V1 without learning a new interface or process. The model’s low barrier to entry—$10 per month—makes it far more accessible than its competitors, particularly for artists and hobbyists who want to bring visuals to life without steep learning curves or costs.

For creators seeking a quick and stylized way to animate images, V1 hits a sweet spot. It’s flexible, easy to iterate with, and built for visual storytelling. While it may not check every box for video professionals, it opens up animation to millions of users who would otherwise never try it. Midjourney’s V1 is not just a feature—it’s a signal that the company is steadily building toward a more immersive, interactive creative future.