AI tools like ChatGPT, Claude, and Gemini are incredibly powerful, but the reality is that most people aren’t using them correctly. In my experience supporting users of Fello AI, I’ve seen this firsthand.

Many people reach out to me, frustrated, saying the AI isn’t working. When they show me the questions they asked, it turns out they were expecting the AI to deliver something extraordinary. But here’s the problem: they’re asking for things that, given how LLMs work, these tools simply can’t handle.

All these popular AI tools are built on technology called Large Language Models (LLMs), which are designed to understand and generate text. Unfortunately, LLMs aren’t perfect. They don’t actually “understand” the world the way humans do. Instead, they process patterns in text, which means they can sometimes give answers that sound convincing but are actually incorrect or nonsensical.

This guide is here to help you understand how to use these tools effectively, so you can get the most out of them.

1/ Understand How Large Language Models Work

LLMs like ChatGPT, Claude or Gemini are powered by neural networks. These networks are trained on vast amounts of text from the internet. The training process involves feeding the AI billions of sentences and phrases.

LLMs then learn to recognize patterns in how words and sentences are structured. This is why they can generate text that sounds natural and human-like. There is no magical, sci-fi artificial intelligence working in the background.

It’s crucial to understand that LLMs don’t have real knowledge or understanding. They don’t “think” or “know” things in the way humans do. Instead, they predict what comes next in a sentence based on the patterns they’ve learned.

For example, if you ask an LLM for the capital of France, it will almost certainly include “Paris” in its response, because it has seen that pairing (France – Paris) many times in its training data.
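To make “predicting what comes next” a bit more concrete, here is a deliberately tiny sketch in Python. It is not how ChatGPT, Claude, or Gemini are actually built: real LLMs use neural networks that take the whole preceding text into account, while this toy (a so-called bigram model) only counts which word follows which in a handful of made-up sentences and picks the most frequent follower. The corpus and function names are purely illustrative.

```python
from collections import Counter, defaultdict

# A tiny, made-up "training corpus" (real LLMs see billions of sentences).
corpus = [
    "the capital of france is paris",
    "paris is the capital of france",
    "the capital of italy is rome",
    "the capital of france is paris",
]

def train_bigrams(sentences):
    """Count how often each word is directly followed by each other word."""
    follows = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows

def predict_next(follows, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    if word not in follows:
        return None
    return follows[word].most_common(1)[0][0]

model = train_bigrams(corpus)
print(predict_next(model, "is"))
```

The toy picks “paris” after “is” only because that pairing appears most often in its four sentences. It has no idea what a capital or a country is, which is the point of this section.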

This is why it’s important to double-check anything an AI tells you. LLMs can produce errors or even invent information that seems true but isn’t. Always verify the facts, especially if accuracy matters.

But here’s where it gets tricky. If the AI encounters a question it doesn’t know the answer to, or if the information isn’t clear in its training data, it might just “guess” some probable fact. This guess could be completely wrong, but it might still look like a well-formed and confident answer. This is what’s known as an AI “hallucination.”

The AI isn’t lying on purpose; it’s just filling in the gaps with what seems most likely based on the patterns it has learned. That’s why it’s so important to be cautious with the information an LLM provides, especially on topics where accuracy is critical (like health, security, or legal issues).
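The sketch below illustrates why a model “guesses” instead of staying silent. It assumes the model has assigned scores to a few candidate next words for a question about a fictional country, Veldoria, that it knows nothing about; the scores, the country, and the city names are all made up. Turning the scores into probabilities (a standard step called softmax) and then sampling from them always produces some word, even when no option is clearly better than the others.

```python
import math
import random

def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    highest = max(scores.values())  # subtract the max for numerical stability
    exps = {word: math.exp(s - highest) for word, s in scores.items()}
    total = sum(exps.values())
    return {word: e / total for word, e in exps.items()}

def sample_next(scores, rng):
    """Pick one candidate word at random, weighted by its probability."""
    probs = softmax(scores)
    words = list(probs)
    return rng.choices(words, weights=[probs[w] for w in words], k=1)[0]

# Hypothetical scores for the word after "The capital of Veldoria is ...".
# The model has no real data, so the scores are nearly flat,
# yet it still has to output something.
uncertain_scores = {"Veldor": 1.1, "Anselm": 1.0, "Kessa": 0.9, "Marrow": 0.9}

rng = random.Random(0)
print(softmax(uncertain_scores))
print(sample_next(uncertain_scores, rng))
```

Whichever city comes out, it would be wrapped in a fluent, confident-sounding sentence, and that is exactly what a hallucination looks like to the reader.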

2/ Keep the Knowledge Cutoff in Mind

On their own, Large Language Models don’t have real-time access to the internet. They only know what was in their training data up to a certain date, called the “knowledge cutoff,” so they aren’t aware of anything that happened afterward.

For example, if you ask about something that happened in the past few days (most likely after the AI’s cutoff date), it won’t know about it. It’s like asking someone about a new movie they haven’t seen yet: they simply can’t tell you anything about it. And, as mentioned earlier, the AI might fill in the gaps with made-up information based on what it thinks is likely.

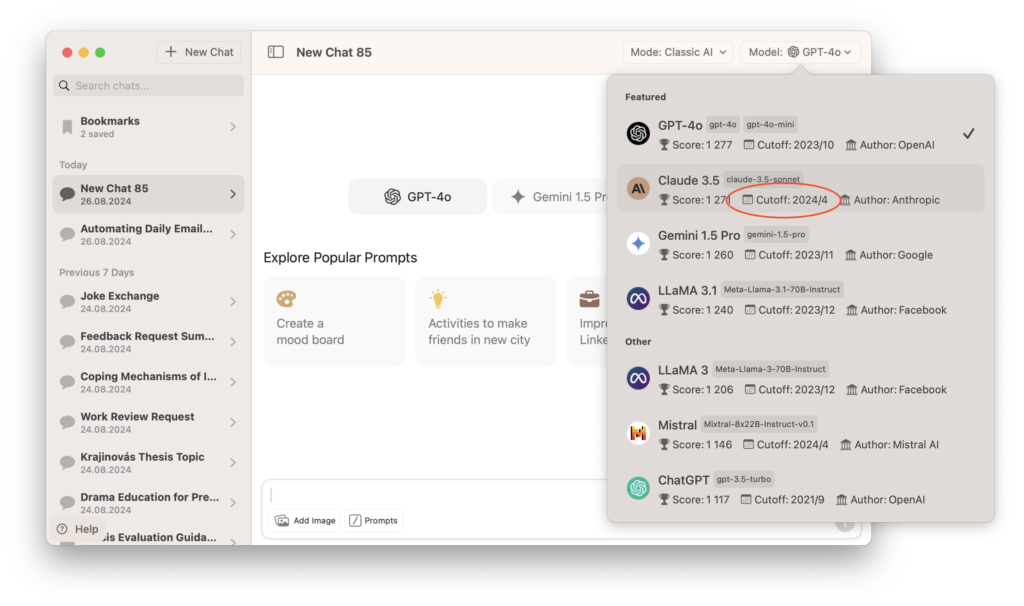

In Fello AI, the cutoff date for each LLM is clearly displayed when you select the model. This is helpful because it reminds you not to rely on the AI for the latest data. Always consider this limitation and verify anything that requires current information.

Understanding this limitation is essential when using LLMs for tasks that need up-to-date information. Here’s a simple trick: upload recent PDF reports, copy-paste articles, or provide links to current sources on the topic. This way, the AI can use the latest data to generate responses, giving you more accurate and relevant information.
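When you paste material into the chat, it helps to tell the AI explicitly to rely on it. Here is a minimal sketch of such a prompt written as a Python function. The function name, the wording of the instructions, and the delimiter lines are assumptions chosen for illustration, not a Fello AI feature; the function only builds the text you would paste into the chat window and does not call any AI service.

```python
def build_grounded_prompt(question, source_text, source_name):
    """Combine pasted source material and a question into one prompt (illustrative wording)."""
    return (
        f"Answer using only the source below ({source_name}).\n"
        "If the source does not contain the answer, say so instead of guessing.\n\n"
        "--- SOURCE START ---\n"
        f"{source_text}\n"
        "--- SOURCE END ---\n\n"
        f"Question: {question}"
    )

# Placeholder text: in practice you would paste the article or report here.
article = "Paste the text of a recent article or report here."
prompt = build_grounded_prompt("What are the main findings?", article, "recent report")
print(prompt)
```

Asking the model to admit when the source lacks an answer tends to reduce made-up details, but it is not a guarantee, so verifying the answer still matters.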

3/ LLMs Are Not Good at Math

Think of an LLM like that classmate who was amazing at writing essays with perfect grammar but couldn’t quite grasp math. LLMs excel at generating text and understanding language, but they aren’t so great when it comes to handling numbers or doing calculations.

When you ask an LLM to do math, it doesn’t actually calculate anything; it keeps generating text, because that’s what it’s designed to do. Even though it might seem counterintuitive, LLMs aren’t built to do precise calculations the way a calculator is. They can easily make basic arithmetic mistakes or give wrong answers to more complex problems.

LLMs are nevertheless good at writing code, because programming languages resemble human languages more than they resemble math.

If you need accurate math, it’s better to use a tool built for that purpose. One excellent option is WolframAlpha, a computational engine that can solve a wide range of math problems, from simple calculations to complex equations. Unlike LLMs, it’s designed to provide precise answers in areas like algebra, calculus, statistics, and more.

4/ Provide Clear Instructions

When interacting with an AI, the instructions you give—called “prompts”—are crucial. The clearer and more specific your prompt, the better the AI can respond. A well-crafted prompt guides the AI, reducing the chances of getting a vague or irrelevant answer.

For example, instead of saying, “Tell me about dogs,” you could say, “Give me a brief overview of different dog breeds, focusing on their size and temperament.” This way, the AI knows exactly what you’re looking for and can provide a more targeted response.

Think of it like giving directions. The more precise you are, the easier it is for someone—or in this case, an AI—to get you where you want to go.

To get even better results, consider using some advanced strategies, often referred to as prompt engineering. For instance:

- Include Details: Add context or specific details in your query to get more relevant answers.

- Ask the AI to Adopt a Persona: You can instruct the AI to respond as a specific type of expert, such as a historian or a technical writer.

- Specify the Desired Length: Tell the AI if you want a short summary, a detailed explanation, or something in between.

By refining your prompts using these strategies, you can get responses that are more accurate, relevant, and aligned with what you need.
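The three strategies can be combined into a single prompt. The hypothetical Python helper below only assembles text (persona first, then the task, then context details, then the desired length); the function name, its parameters, and that ordering are illustrative choices, and it does not call any AI service. The result is what you would type or paste into ChatGPT, Claude, Gemini, or Fello AI.

```python
def build_prompt(task, persona=None, details=None, length=None):
    """Assemble a prompt from optional persona, context details, and length (illustrative)."""
    parts = []
    if persona:
        parts.append(f"You are {persona}.")        # adopt a persona
    parts.append(task)                             # the actual request
    if details:
        parts.append("Context: " + " ".join(details))  # include details
    if length:
        parts.append(f"Keep the answer to {length}.")  # specify the desired length
    return "\n".join(parts)

dog_prompt = build_prompt(
    task="Give me a brief overview of different dog breeds, focusing on their size and temperament.",
    persona="an experienced veterinarian",
    details=["I live in a small apartment.", "I have two young children."],
    length="about 200 words",
)
print(dog_prompt)
```

Each part is optional, so the same helper also works for a quick one-line question: `build_prompt("Hi")` simply returns `"Hi"`.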

5/ Don’t Expect Perfect Results on the First Try

A common mistake people make when using AI is expecting the perfect answer right away. They give one prompt and hope the AI will nail it on the first try, but that rarely happens. LLMs like ChatGPT, Claude, and Gemini are powerful, but they often need guidance and refinement to produce the best results.

Think of working with an LLM as a collaborative process:

- Start with a broad prompt to kick off the conversation.

- Brainstorm ideas if you’re tackling a complex task, like writing a report.

- Ask the AI to outline the main sections or structure of the task.

- Refine by asking more specific questions to fill in details for each part.

- Iterate and adjust as you go, improving the accuracy and relevance of the output.

This step-by-step approach lets you guide the AI more effectively and shape its output more accurately. By breaking your task into smaller parts, you can get each section right before moving on and fix any misunderstandings along the way.

It’s normal to go back and forth with the AI. You may need to adjust your prompts or add more details to get the right output. Don’t rush the process—good results often come from refining and iterating. Taking the time to work through each step will get you closer to the final result you want.

Conclusion

By following these tips, you’re already ahead of most people who use ChatGPT, Claude, and Gemini. Understanding how these tools work, working step by step, and giving clear, specific prompts will help you get much better results.

Many people get frustrated because they imagine AI as something out of a sci-fi movie, expecting it to do everything perfectly. Now that you know how Large Language Models really work, you can avoid those unrealistic expectations and use them more effectively.

Don’t forget to subscribe to our newsletter for more AI-related tips and tricks. If you have any further questions or need help with your specific use case, feel free to contact me at felloai@icloud.com.