Imagine a world where your doctor is a chatbot, your child’s teacher is an AI tutor, and empathy is replaced by efficiency. Sounds futuristic? According to Bill Gates, it’s less than a decade away.

In a widely discussed interview on The Tonight Show, the Microsoft co-founder predicted that artificial intelligence will soon make expert-level medical advice and personalized tutoring “free and commonplace.” But this isn’t just another tech upgrade—it’s a fundamental shift in how we relate to knowledge, care, and each other. It’s not just about replacing inefficiencies; it’s about redefining what it means to be an expert, a caregiver, and even a human.

Is this a breakthrough for humanity or a breakdown of what makes us human?

In this article, we’ll unpack what Gates really means by “free intelligence,” where AI is already transforming health and education, how it may reshape the global labor market, and whether we’re heading toward a golden age of collaboration—or a future where empathy is outsourced to code.

A decade to “free intelligence”?

When Bill Gates spoke about AI making high-quality advice universally accessible, he wasn’t simply imagining a more efficient world. He was describing a future in which access to human-level knowledge no longer depends on geography, income, or even a professional’s availability. He referred to this vision as the era of “free intelligence,” where computing power and cognitive labor become as cheap and ubiquitous as electricity.

To Gates, this is a leap forward in democratizing opportunity. But to many experts in healthcare and education, it’s a dangerous oversimplification. Critics argue that this forecast treats empathy, context, and lived experience as outdated luxuries. What happens when a diagnostic tool misses the subtle clues a seasoned doctor would catch? Or when a student struggling with trauma is handed a chatbot instead of a counselor?

The debate isn’t about whether AI will enter clinics and classrooms. It’s already happening. The question is whether we’ll use AI to empower professionals—or to replace them entirely.

Can algorithms cure?

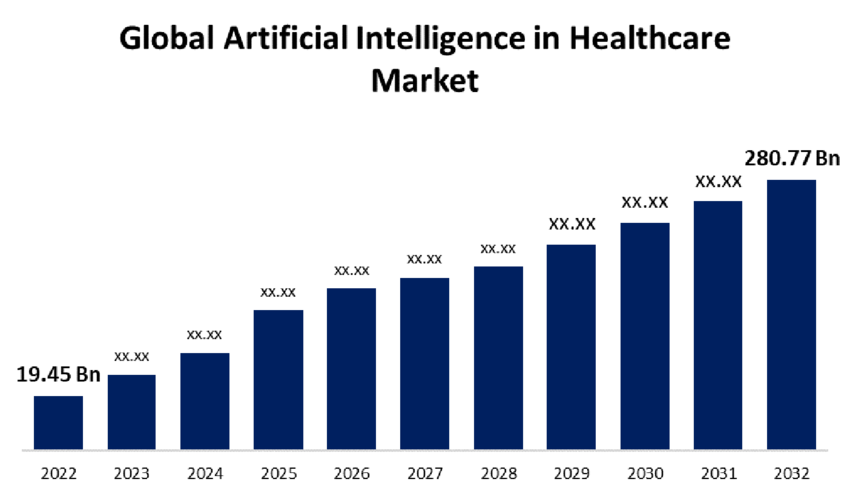

Hospitals have become some of the most fertile testing grounds for artificial intelligence. These environments generate massive amounts of structured and unstructured data, making them ideal for training machine-learning systems. Today, AI tools can identify eye diseases, assist in cancer diagnosis, and, in some settings, triage emergency-room patients faster than junior clinicians.

Where AI shines in medicine:

- Pattern recognition: Detecting anomalies in imaging scans and pathology slides

- Predictive analytics: Forecasting sepsis, cardiac events, and ICU readmissions

- Administrative support: Drafting medical documentation, automating referrals, processing billing codes

These advancements are already improving outcomes in some areas. AI systems are helping overloaded radiologists avoid fatigue-related errors. In primary care, AI chatbots are easing the documentation burden that contributes to physician burnout.

However, the limitations are just as striking. AI lacks a clinician’s intuition for which follow-up question to ask, the ability to notice a worried spouse’s body language, and the judgment to navigate ethical grey zones. The World Health Organization has released strict recommendations calling for clear accountability, human oversight, and continual safety assessments when using AI in health settings.

Even longtime AI evangelists like Vinod Khosla have revised their stance. Once bullish about replacing doctors, Khosla now emphasizes augmentation—using AI to handle the data so clinicians can focus on what only they can do: offer comfort, exercise judgment, and build trust.

Partnership, not replacement

Still, the pace of change is hard to ignore. As immunologist Dr. Derya Unutmaz put it, “Gates is correct: within a decade, up to 80–90% of doctors, teachers, professors, engineers or lawyers will be replaced by AI.” But he adds a crucial caveat: the remaining professionals won’t disappear—they’ll shift roles. They’ll work alongside AI to guide discoveries, generate new knowledge, and ensure technology continues to benefit humans.

The real transformation lies not in replacement but in realignment. AI may take over repetitive, data-heavy tasks, but it will be up to humans to provide the context, the empathy, and the ethical compass.

The future of medicine isn’t about choosing between humans and machines. It’s about designing a system where the best of both work in tandem.

Gates is correct: within a decade, up to 80-90% of doctors, teachers, professors, engineers or lawyers will be replaced by AI. The remaining professionals will focus on aiding AI to make discoveries, create new knowledge & to keep it aligned to benefit humans in these fields. https://t.co/qaNvDp5DsY

— Derya Unutmaz, MD (@DeryaTR_) April 18, 2025

The classroom gets an AI assistant

Education, like healthcare, has long suffered from uneven access. One-on-one tutoring has consistently been shown to produce the best academic results—but it’s too expensive and labor-intensive to offer at scale. AI is changing that.

Large language models can now simulate tutoring sessions in multiple languages, offer step-by-step explanations, and adapt to a student’s pace. Early studies suggest these tools can close learning gaps when used consistently. Teachers, especially in underfunded districts, are beginning to use AI to generate lesson plans, summarize student performance, and provide feedback on essays.

Still, there are major caveats. The UNESCO policy framework warns that if AI tools are rolled out without safeguards, they could worsen educational inequity. Well-resourced schools will use AI as a supplement; under-resourced ones may try to use it as a replacement.

And that’s where the human element matters. Good teaching is not just about content delivery. It’s about helping students navigate social dynamics, emotional development, and complex moral decisions. AI has no lived experience to draw from, and it can’t reliably pick up on subtle signs of disengagement, bullying, or an undiagnosed learning disability.

The best-case scenario? AI handles rote tasks while teachers do what machines can’t: coach, mentor, inspire. Some schools are already experimenting with this “co-teacher” model. Early results show promise, but they also highlight the need for strong human guidance.

Economic shockwaves and safety nets

The rise of general-purpose AI is not just a tech story—it’s a labor story. According to a recent Oxford study, nearly half of all work tasks in the U.S. could be automated by 2035. Unlike previous automation waves that targeted manual labor, this one is coming for knowledge workers.

Professionals once thought immune—legal assistants, medical coders, translators—are now seeing core tasks replicated by AI systems. Some jobs will vanish entirely. Others will evolve, requiring workers to master entirely new skills mid-career.

There are two potential paths forward:

- The productivity dividend: Automation frees up human capacity. Governments and companies reinvest those gains into training programs, social safety nets, and reduced workweeks.

- The displacement spiral: Companies slash headcount, GDP rises, but wealth concentrates. Inequality deepens, and societal trust frays.

Microsoft’s AI chief Mustafa Suleyman has called the upcoming shift “hugely destabilizing,” warning that even roles that seem safe today may soon be obsolete. As AI models become more capable, the balance between augmentation and replacement will tilt—and fast.

This is why concepts like universal basic income, portable benefits, and publicly funded upskilling programs are moving from theory to necessity.

Ethics, trust, and the human factor

Trust is the currency of both healthcare and education. When an AI gets it wrong, the consequences can be personal and irreversible. A misdiagnosis. A missed learning disability. A biased recommendation. The ripple effects can be lifelong.

The World Health Organization has outlined a set of 40+ safeguards for implementing AI in sensitive domains. These include requiring audit logs, clear accountability structures, human fallback options, and regular algorithmic reviews.

Still, no regulation can close the empathy gap. An AI might know the right words to say, but it doesn’t feel them. Real rapport, whether between a patient and doctor or a student and teacher, is built on shared experience, vulnerability, and emotional resonance.

Studies show patients are more likely to follow treatment plans when they trust their physician. Students learn better when they feel understood. No algorithm has mastered that yet, and perhaps none ever will.

Collaboration, not substitution

Gates might be right: we’re entering an era where high-quality knowledge is accessible to everyone. But access isn’t the same as connection.

Doctors and teachers don’t just dispense information. They listen, interpret, empathize, and guide. These are not inefficiencies to be optimized away—they are the foundation of trust-based systems.

If we build AI that enhances—not erases—human roles, we could create something remarkable: a world where professionals are freed from paperwork and empowered to be more present, more intuitive, more human.

The future doesn’t have to be man versus machine. It can be man with machine, working together. But that depends on the choices we make now.

So here’s the question: would you let an algorithm teach your child? Diagnose your illness? Decide what matters?

Maybe the real question isn’t whether AI can replace us, but whether we should let it.

And if we don’t want that future, what are we going to do about it?