At Google I/O 2025, the company finally unveiled what might be its most transformative media-generation model to date: Veo 3. With this release, Google doesn’t just take a step forward in AI-generated video—it ends what DeepMind CEO Demis Hassabis calls “the silent era.”

For years, tools like OpenAI’s Sora and Runway’s Gen-2 have dazzled users with surreal, hyperrealistic video clips generated from just text prompts. But they all shared a glaring limitation: silence. While users could create short clips and scenes, they still needed to manually add soundtracks, voiceovers, or foley effects in post-production. Google’s Veo 3 changes this by introducing native audio-video generation, opening the door to a fundamentally new kind of AI filmmaking.

What Veo 3 Can Actually Do

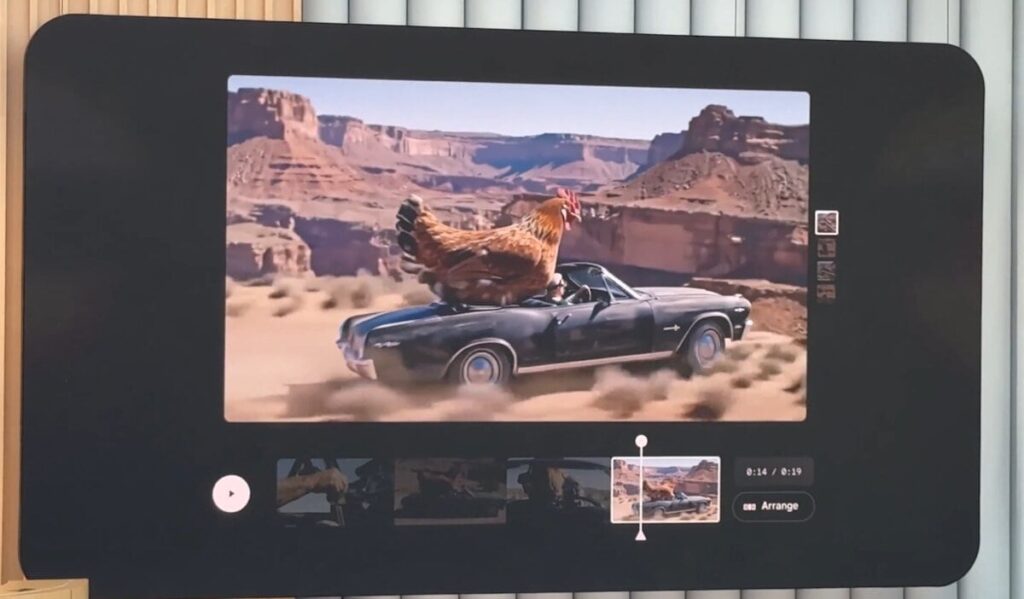

Veo 3 is the third iteration of Google’s text-to-video model, and its most advanced by a wide margin. Unlike its predecessor, Veo 2, which was limited to silent clips and focused on improved visual realism and camera motion, Veo 3 can generate synchronized audio along with visuals. This includes ambient sounds, background effects like rain or traffic, and, most critically, dialogue with matching lip sync.

In demonstrations at I/O, Google showed off forest animals speaking, complete with coordinated mouth movements and forest ambiance. These weren’t just proof-of-concept experiments. They were short, polished, 4K video clips that looked and sounded like the work of a full animation studio.

For creators, this means a single prompt such as “A baby elephant and two llamas talking near a waterfall” can now produce not just visuals but also believable dialogue, flowing water sounds, and matching lip movements. It compresses the traditional animation and post-production workflow, which can take weeks, into minutes.

I did more tests with Google's #Veo3. Imagine if AI characters became aware they were living in a simulation! pic.twitter.com/nhbrNQMtqv

— Hashem Al-Ghaili (@HashemGhaili) May 21, 2025

Flow, Imagen 4, and Google’s Creative Stack

Veo 3 isn’t a standalone update. It’s part of a broader strategy to unify Google’s AI tools under a usable creative stack. The new “Flow” tool sits at the center of this effort. Built for filmmakers and creators, Flow combines Veo for video generation, Imagen 4 for still image generation, and Gemini for natural language prompting into a single interface.

Within Flow, users can describe scenes in plain language, select or create visual assets, adjust camera angles, and reuse clips from a shared library. Think of it as a no-code film studio. Imagen 4, which also launched at I/O, now produces images up to 2K resolution and handles detailed rendering of textures and even typography. Together, these tools create a foundation for low-friction, AI-first media production.

Flow is available to Google AI Pro and Ultra users in the US, with Veo 3 restricted to the Ultra tier ($249.99/month) and enterprise customers via Vertex AI.

Performance Benchmarks and Capabilities

Google shared benchmark data from human-evaluated test sets, suggesting Veo 3 is ahead of its competitors in key areas:

| Metric (MovieBench subset) | Veo 3 win rate | Nearest rival |
|---|---|---|
| Text-to-Video overall preference | 72% | Sora 23% |
| Visual quality | 68% | Sora 27% |
| Physics realism | 75% | Gen-3 Alpha 19% |
| Audio-video sync | 81% | Movie Gen 14% |

In this internal study using the “MovieBench” test suite, human evaluators preferred Veo 3 overall 72% of the time, compared to 23% for OpenAI’s Sora. Veo 3 also scored higher on physics realism and audio-video sync.
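To make the reported figures easier to compare, here is a minimal Python sketch that works through the arithmetic. It uses only the percentages quoted in this article. One assumption to flag: the article does not say what happened to the votes that went to neither model, so the sketch simply labels that remainder "unaccounted" rather than calling it ties. The `summarize` helper is illustrative and not part of any Google tooling.

```python
# Win rates as quoted in the article (percent of human-evaluator votes).
# Each entry: metric -> (nearest rival, Veo 3 percent, rival percent).
RESULTS = {
    "Text-to-Video overall preference": ("Sora", 72, 23),
    "Visual quality": ("Sora", 68, 27),
    "Physics realism": ("Gen-3 Alpha", 75, 19),
    "Audio-video sync": ("Movie Gen", 81, 14),
}


def summarize(veo_pct, rival_pct):
    """Return (margin in points, unaccounted percent, Veo share of decisive votes)."""
    margin = veo_pct - rival_pct
    # Assumption: whatever is left over is ties or "no preference";
    # the article does not state how those votes were handled.
    unaccounted = 100 - veo_pct - rival_pct
    decisive_share = veo_pct / (veo_pct + rival_pct)
    return margin, unaccounted, decisive_share


for metric, (rival, veo_pct, rival_pct) in RESULTS.items():
    margin, unaccounted, decisive = summarize(veo_pct, rival_pct)
    print(
        f"{metric}: +{margin} pts vs {rival}, "
        f"{unaccounted}% unaccounted, {decisive:.0%} of decisive votes"
    )
```

In every row the two percentages sum to less than 100, so headline numbers like 72% are best read alongside the rival's figure rather than on their own.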

Clip duration is still limited, however. While Veo 3 can produce visually rich, high-resolution clips, they’re capped at around 8 seconds within Flow. Longer sequences are possible via enterprise APIs but are not yet accessible to the general public. OpenAI’s Sora still leads in this domain, offering up to 60-second renders.

How Veo 3 Compares to the Competition

As generative video continues to mature, several major players have released their own platforms—each with its own strengths and limitations. While Veo 3 brings groundbreaking innovation in synchronized audiovisual generation, it competes in a diverse field that includes OpenAI’s Sora, Runway Gen-3 Alpha, Pika 2.2, and Meta’s research-only Movie Gen.

Here’s how they stack up:

| Feature | Veo 3 | OpenAI Sora | Runway Gen-3 Alpha | Pika 2.2 | Meta Movie Gen |
|---|---|---|---|---|---|
| Max resolution | 4K | 1080p | 1080p | 1080p | 1080p |
| Max length (public) | 8 s Flow / 30 s+ via API* | 60 s | 10 s | 10 s | 16 s |
| Native audio | Yes | No | Post-sync only | Sound-effects option | Yes (research) |
| Lip-sync | Yes | Partial (workaround) | Planned | Beta | Yes |
| Physics fidelity | High | Medium | High | Low-medium | Medium |
| Price (starter) | $249/mo (Ultra) | $20/mo (ChatGPT Plus) | $35/mo (Standard 625 credits) | Free / credit packs | Research only |
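For readers who want to query this comparison rather than scan it, the table can be sketched as a small Python data structure. The values are transcribed from the table above, with some simplifying assumptions: `native_audio` is true only where the table gives a plain "Yes", so Runway's "Post-sync only" and Pika's "Sound-effects option" count as false. Veo 3 uses the 8-second Flow limit, and Movie Gen is marked as not publicly available. The `Tool` class and `shortlist` function are hypothetical names used for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tool:
    name: str
    max_public_seconds: int   # longest publicly available clip, per the table
    native_audio: bool        # True only where the table says a plain "Yes"
    publicly_available: bool  # False for research-only models


# Values transcribed from the comparison table (simplified, see lead-in).
TOOLS = [
    Tool("Veo 3", 8, True, True),
    Tool("OpenAI Sora", 60, False, True),
    Tool("Runway Gen-3 Alpha", 10, False, True),
    Tool("Pika 2.2", 10, False, True),
    Tool("Meta Movie Gen", 16, True, False),
]


def shortlist(tools, min_seconds=0, need_audio=False):
    """Return names of publicly available tools that meet the requirements."""
    return [
        t.name
        for t in tools
        if t.publicly_available
        and t.max_public_seconds >= min_seconds
        and (t.native_audio or not need_audio)
    ]


print(shortlist(TOOLS, need_audio=True))   # ['Veo 3']
print(shortlist(TOOLS, min_seconds=30))    # ['OpenAI Sora']
```

The two queries capture the trade-off described in this section: Veo 3 is the only public option with native audio, while Sora is the only one offering clips of 30 seconds or more to the general public.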

OpenAI Sora

OpenAI’s Sora has been a leader in the AI video space, primarily due to its long-duration rendering capabilities and its tight integration with the ChatGPT ecosystem. Sora allows users to create up to 60-second video clips in high resolution, which makes it ideal for storytelling that requires narrative progression and sustained motion. Where Sora shines is in coherence across frames, naturalistic movement, and object permanence, largely thanks to OpenAI’s data pipeline and refinement via user feedback.

However, Sora lacks native audio support. Users must manually add audio post-generation, which introduces friction, especially for scenes that require tight lip-syncing or environmental realism. This is where Veo 3 takes a clear lead: it removes the post-processing layer entirely. While Sora remains a powerful option for visual content creators, its inability to generate synchronized audio makes it less suitable for applications involving dialogue, soundscapes, or fully immersive scenes.

Runway Gen-3 Alpha

Runway has carved out a strong niche among creators with Gen-3 Alpha, focusing on short-form, visually rich video generation. Its main strength lies in user control. Runway offers intuitive tools for adjusting camera paths, lighting, and scene transitions, which gives creators more flexibility in crafting polished visuals. These features are especially useful in content marketing, music videos, and TikTok-style short content, where aesthetics and dynamic camera angles are key.

Despite these advantages, Runway still requires users to add audio manually. It supports lip-syncing tools and audio import features, but the audio pipeline is distinct from the video generator. This disjointed workflow limits the tool’s usefulness for filmmakers or educators who want a full audio-visual experience in one go. Compared to Veo 3, which outputs complete cinematic clips with ambient sounds and dialogue in a single prompt, Runway still feels like a multi-step process.

Pika 2.2

Pika Labs’ Pika 2.2 stands out for its experimental flexibility. It supports a wide range of inputs including text, images, and keyframes. Users can animate static content or extend scenes using motion prompts, which gives it an edge for creators who want to iterate on existing media. Tools like Pikaframes and Pikaffects offer a modular approach to video creation.

However, when it comes to audio, Pika remains limited. While it has introduced basic support for sound effects, these are usually simplistic and not deeply integrated into the video generation process. There is no dialogue generation or sophisticated ambient audio modeling. Additionally, Pika lags behind in rendering quality, realism, and physics simulation. For quick social content or casual creative use, Pika is still valuable. But for high-end, audio-visual synchronized output, Veo 3 offers a significantly more advanced and polished product.

Meta’s Movie Gen

Meta’s Movie Gen is perhaps the closest theoretical competitor to Veo 3 in terms of ambition. As a research tool, it demonstrated synchronized audio and video generation months before Veo 3’s launch. Meta has focused on realistic speech synthesis, character behavior modeling, and contextual soundscapes. In controlled demos, Movie Gen produced coherent dialogue and realistic scenes with high temporal consistency.

The issue is accessibility. Movie Gen is not available for public or commercial use. It exists within Meta’s research labs and has not been productized or offered as a developer API. As such, while its technical capabilities may rival Veo 3, they remain out of reach for actual users. Veo 3, on the other hand, is already live through Flow and Vertex AI, which gives Google a major head start in terms of market traction, feedback loops, and creator adoption.

Real Use Cases Already Emerging

Despite current limitations in clip duration and its initial availability only to U.S.-based users through the Ultra subscription tier, Veo 3 is already proving to be a useful tool across a variety of industries. Its ability to generate complete video scenes—including sound effects, ambient audio, and dialogue—makes it especially attractive for professionals and creators working under tight budgets or compressed timelines.

Filmmaking and Storyboarding

Veo 3 offers a powerful tool for creating animatics and visual prototypes of scenes before entering full production. Directors can input a simple scene description and receive a fully realized clip with synchronized audio, voice lines, and camera movements.

This allows filmmakers to test tone, pacing, and even dialogue delivery early in the creative process, which historically required significant manual work or collaboration with previsualization teams.

Independent filmmakers with limited budgets can now generate scenes that previously demanded a full team of animators, voice actors, and sound designers.

Advertising and Marketing

Veo 3 provides agencies with the ability to turn text-based ideas into polished, short-form promotional clips in a matter of minutes. A marketer can input a prompt like “A sneaker slamming onto a basketball court, with hip-hop music and crowd cheering” and receive a cinematic-style clip that needs minimal editing.

With the ability to render at 4K quality and generate branded voiceovers directly from a prompt, Veo 3 could significantly reduce both turnaround time and production costs.

Google reached out to me to create a short film for Google I/O 2025 using Flow, their new AI filmmaking tool. It’s been a magical experience collaborating with one of the most visionary tech teams in the world. @Google #GoogleIO

The result is Dear “Stranger”, a film that… pic.twitter.com/nvyDWhxwop

— Junie Lau (@JunieLauX) May 21, 2025

Educational Content

Veo 3 also presents clear value for educators and institutions looking to produce engaging educational content without relying on stock footage or large media teams. A teacher could prompt the system with something like “A virtual cell explaining how DNA replicates, in a friendly voice” and receive an animated sequence with matching audio and visuals.

Game Development and Cutscenes

In the indie gaming scene, studios often struggle to balance creative vision with production constraints. Veo 3 can help developers quickly create cinematic intros, character moments, or lore-based storytelling segments without needing to hire separate voice actors or animators.

For example, a small team could create a narrated backstory scene for a new game character with just a written prompt, adding a layer of professionalism to their project that would otherwise be out of reach.

Created with Google Flow.

Visuals, Sound Design, and Voice were prompted using Veo 3 text-to-video.

Welcome to a new era of filmmaking. pic.twitter.com/E3NSA1WsXe

— Dave Clark (@Diesol) May 21, 2025

These aren’t hypothetical possibilities. Google has already engaged with early adopters like Dave Clark, who created shorts such as “Battalion” and “NinjaPunk,” and filmmaker Junie Lau, known for personal and relationship-driven storytelling. Both have used Flow and Veo to prototype or finalize projects, demonstrating that the tool is ready for more than just experimentation—it’s already being deployed in real workflows.

Watch Battalion—My First Ever 5-Minute Gen AI Short Film. I had to upload it in two parts. This is part 1; part 2 is in the comments.

I created this over a few weeks using 100% image-to-video and text-to-video Generative AI. While not perfect, the tools advance daily, even… pic.twitter.com/MJC26Hn8Eu

— Dave Clark (@Diesol) September 26, 2024

Deepfakes, Security, & Ethics

Veo 3 introduces a new level of realism in AI-generated video. For the first time, a single model can produce visuals and synchronized, natural-sounding dialogue. This unlocks new creative possibilities—but also new risks.

Deepfakes have evolved from crude, easily debunked forgeries into highly convincing simulations. Veo 3 allows for rapid creation of fake videos where people appear to speak convincingly, in their own voices and mannerisms. The danger of misinformation, hoaxes, and impersonation increases significantly.

Real-World Risks

One major concern is the impersonation of public figures. A realistic fake video can cause political damage, spark misinformation, or incite real-world harm. The barrier to creating such content has now dropped to just a prompt.

There’s also job displacement. Tools like Veo 3 automate tasks previously done by animators, voice actors, and video editors. A recent study projected over 100,000 jobs in media production could be affected by AI-generated content by 2026.

Google’s Built-in Protections

Google has included a range of protections:

- SynthID Watermarking invisibly marks both video and audio outputs

- Prompt-level moderation blocks harmful requests at generation time

- Scene classifiers and auditing tools monitor content in enterprise deployments

- SynthID Detector helps users identify whether media was made with Veo

These tools aim to balance innovation with responsibility, but they’re not foolproof.

Enforcement Gaps

SynthID helps only if platforms check for it. Currently, few major video sites scan for or enforce such markers. Bad actors can also re-encode or heavily edit videos to try to degrade the watermark, and stripping metadata removes any provenance labels stored alongside the file.

There’s also no global legal framework in place. The EU has adopted the AI Act, which includes transparency obligations for synthetic media, but most countries have no regulation around synthetic media, leaving a patchwork of standards.

A Shared Responsibility

Google alone can’t manage the risks. Platforms need built-in detection systems. Creators should disclose AI-generated content. Governments must define rules and enforce them. Until that ecosystem exists, even well-designed tools like SynthID will have limited impact.

Trust in visual media is already weakening. As AI-generated content becomes harder to distinguish from real footage, verifiable proof of authenticity will become essential.

Where This Is All Headed

Veo 3 is not the end point—it’s a marker. Google plans to extend video length, offer interactive editing within YouTube Shorts, and integrate real-time generation pipelines. These features would support longer storytelling formats, such as episodic content or serialized narratives, making Veo not just a prototyping tool but a full production pipeline.

Google’s integration strategy is clear. With Veo for video, Imagen for visuals, Gemini for scripting and prompting, and Lyria for music, the company is assembling a comprehensive toolkit for generative media creation. These tools aren’t built in isolation—they’re designed to work together in Flow, creating a seamless environment where users can manage characters, environments, dialogue, camera moves, and score.

This is about more than convenience. It’s vertical integration of creative media. Google is positioning itself to be the Adobe, Pixar, and Final Draft of the generative era—all in one. If Flow becomes the industry standard, it could redefine how film, advertising, and gaming content is created.

In parallel, we’re likely to see expansion into real-time content generation. This means live generative performances or interactive streaming, where audiences influence the story and the AI responds dynamically. Educational platforms, virtual influencers, and simulation-based training programs may adopt such capabilities rapidly.

This isn’t just a tool for creators. It’s a threat to the traditional media production process. Content that once took teams of animators, editors, and sound engineers can now be generated from a single prompt. If Veo continues evolving at this pace, we may soon see the first AI-generated feature film with no human crew involved.

And this time, it won’t be silent.