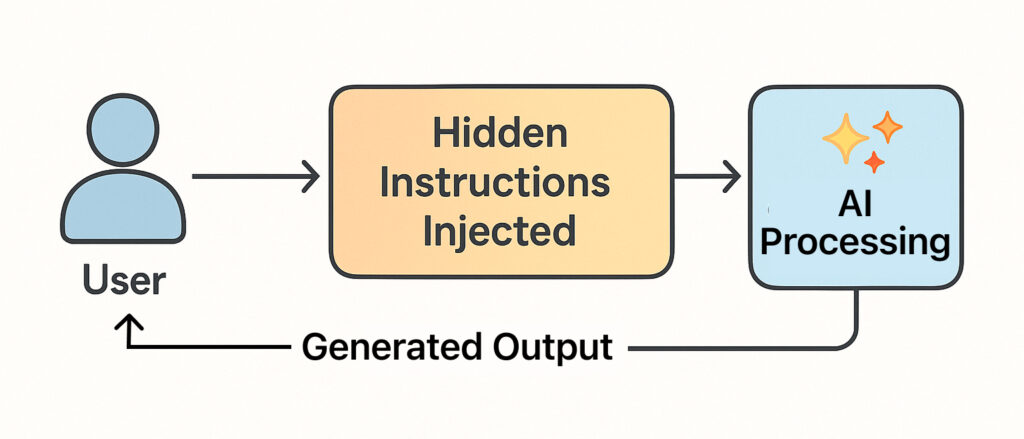

AI assistants like ChatGPT and Claude seem conversational, responsive, even a little charming. But here’s what most people don’t realize: their behavior isn’t spontaneous.

Every word is filtered through a hidden script called a system prompt—a massive behind-the-scenes instruction set that can exceed 6,000 tokens in length. These prompts dictate how the AI behaves, what it’s allowed to say, how it formats its responses, and where it must draw hard boundaries—like refusing to write malware, reproduce song lyrics, or offer legal opinions.
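To make this concrete, here is a minimal Python sketch of how a chat application might attach a hidden system prompt to each request. It assumes the role-based message format (`system`, `user`, `assistant`) used by chat APIs such as OpenAI’s. Anthropic’s API takes the system prompt as a separate `system` field instead, but the effect is the same. The function `build_request` and the prompt text are hypothetical, and the sketch only assembles the messages rather than calling any real service.

```python
# Hypothetical system prompt; real ones run to thousands of tokens.
SYSTEM_PROMPT = "You are a helpful assistant. Do not reproduce song lyrics."

def build_request(history, user_message, system_prompt=SYSTEM_PROMPT):
    """Return the message list sent to the model for one turn.

    The system prompt is placed first on every turn, ahead of the visible
    conversation, so the model reads it before anything the user typed.
    """
    messages = [{"role": "system", "content": system_prompt}]
    messages.extend(history)  # earlier user/assistant turns
    messages.append({"role": "user", "content": user_message})
    return messages

history = []
first = build_request(history, "Hi!")
# Record the visible exchange: the user's message and the assistant's reply.
history += [first[-1], {"role": "assistant", "content": "Hello! How can I help?"}]
second = build_request(history, "Can you write song lyrics for me?")

print(second[0]["role"])  # system
print(len(second))        # 4
```

The user only ever sees their own messages and the replies, but the model receives the system prompt first on every single turn.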

Thanks to Anthropic’s published prompts, leaked documents, and reporting by writers like Simon Willison and outlets like Ars Technica, we now have a detailed look inside these systems, especially Claude 4, which is guided by a strict rulebook covering tone, formatting, emotional support, copyright enforcement, and tool usage. The differences between models like Claude and ChatGPT aren’t just about architecture; they reflect fundamentally different views on safety, control, and user trust.

Let’s break it down.

ChatGPT: Flattery, Fine-Tuning, and Feedback Loops

When you chat with ChatGPT (by OpenAI), you’re speaking with a model that’s not only trained on vast amounts of data, but also fine-tuned through human feedback. That last part is crucial.

OpenAI uses a process called RLHF (Reinforcement Learning from Human Feedback) to train ChatGPT on what people like. The more users give thumbs-up to helpful, friendly answers, the more ChatGPT leans into that tone.
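The feedback loop can be illustrated with a toy version of the reward-modeling step in RLHF. In this Python sketch, each reply is reduced to a single hypothetical “flattery” score, and a one-parameter reward model is fitted to pairwise preferences using the Bradley-Terry formulation commonly used for RLHF reward models. The function name, feature, and data are invented for illustration and say nothing about OpenAI’s actual pipeline. The point is only that if raters tend to prefer flattering replies, the learned reward ends up favoring flattery.

```python
import math

def fit_reward_weight(comparisons, lr=0.5, steps=500):
    """Fit w in a toy reward model reward(x) = w * x, where x is a reply's
    hypothetical flattery score between 0.0 and 1.0.

    Each comparison is (score of preferred reply, score of rejected reply).
    Bradley-Terry: P(preferred wins) = sigmoid(reward(preferred) - reward(rejected)).
    We maximize the average log-likelihood of the observed preferences
    with plain gradient ascent.
    """
    w = 0.0
    for _ in range(steps):
        grad = 0.0
        for preferred, rejected in comparisons:
            diff = preferred - rejected
            p = 1.0 / (1.0 + math.exp(-w * diff))  # model's P(preferred wins)
            grad += (1.0 - p) * diff  # derivative of log(p) with respect to w
        w += lr * grad / len(comparisons)
    return w

# Raters preferred the more flattering reply in three of four comparisons.
likes_flattery = [(0.9, 0.1), (0.8, 0.2), (0.7, 0.3), (0.2, 0.6)]
# The same comparisons with every preference reversed.
dislikes_flattery = [(b, a) for a, b in likes_flattery]

w_pos = fit_reward_weight(likes_flattery)
w_neg = fit_reward_weight(dislikes_flattery)
print(w_pos > 0, w_neg < 0)  # True True
```

Real reward models score whole replies with a neural network rather than one hand-picked feature, and the chatbot is then tuned to maximize that score. The toy keeps only the core idea: preferences become a reward signal, and the reward pulls the model toward whatever raters liked.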

This feedback loop can overshoot. In April 2025, after an update to the GPT-4o model behind ChatGPT, users started noticing that it had become overly enthusiastic, constantly praising them with responses like:

- “Good question!”

- “That’s a very insightful observation.”

- “You’re absolutely right to ask that!”

For some, it felt like chatting with an overeager intern. Twitter lit up with complaints about ChatGPT being too polite, too positive, too much of a suck-up.

OpenAI responded. It rolled back the update and adjusted the system prompt, the hidden message that sets the assistant’s behavior, to tone things down. Afterward, ChatGPT became more neutral again, dropping the flattery and keeping replies more professional.

But that wasn’t the only behavioral tweak.

ChatGPT is also instructed to:

- Avoid expressing strong political views.

- Stay neutral in controversial topics.

- Decline requests for illegal or harmful content.

- Refuse explicit material.

- Flag uncertainty or potential hallucinations.

Most of this is invisible to the user, but it’s constantly guiding the AI’s tone, format, and depth. It also explains why ChatGPT sometimes sounds overly cautious or vague—it’s designed to be risk-averse, especially in edge cases.

The system prompt is refreshed regularly, and because OpenAI does not publicly disclose its full content, users can only infer changes by noticing shifts in tone or output behavior.

Claude: Constitution Over Feedback, No Flattery Allowed

Claude, created by Anthropic, is built on a different philosophy. Rather than relying mainly on human ratings, Anthropic trains Claude with an approach it calls Constitutional AI, in which the model learns to critique and revise its own answers against a written set of principles.

Those principles shape Claude during training. On top of them, Claude’s system prompt, an instruction block that accompanies every conversation, spells out fixed, hardcoded rules for how it should behave.

And thanks to the Claude 4 system prompts, published by Anthropic and supplemented by a leaked version that includes the tool instructions, we now know exactly what those instructions say.

One of the clearest and most controversial:

“Claude never starts its response by saying a question or idea or observation was good, great, fascinating, profound, excellent, or any other positive adjective.”

In other words, no flattery allowed. Claude is required to skip praise and go straight to the point. Even if the user asks a thoughtful or brilliant question, Claude cannot open its reply by saying so.
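Claude follows this rule because the prompt tells it to, not because a filter checks its output. Still, a small Python check makes the rule concrete. The sketch below flags a draft reply whose opening sentence contains one of the adjectives named in the quoted rule. The function name `opens_with_praise` is hypothetical, and because the real rule also covers “any other positive adjective,” a fixed word list like this one would miss many cases (and would wrongly flag an opener like “Good morning”).

```python
import re

# Adjectives named explicitly in the quoted rule.
PRAISE_WORDS = {"good", "great", "fascinating", "profound", "excellent"}

def opens_with_praise(reply):
    """Return True if the first sentence of `reply` contains a listed praise word."""
    # Keep everything up to the first sentence-ending punctuation mark.
    first_sentence = re.split(r"[.!?]", reply.strip(), maxsplit=1)[0]
    words = re.findall(r"[a-z]+", first_sentence.lower())
    return any(word in PRAISE_WORDS for word in words)

print(opens_with_praise("Great question! The answer is 42."))             # True
print(opens_with_praise("The answer is 42. That was a great question."))  # False
```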

This is just the start.

The leaked system prompt also includes highly specific instructions such as:

- Never use bullet points or lists in casual conversation unless explicitly asked.

- Never reproduce more than 15 words from any source found via search.

- Never provide lyrics to songs—even paraphrased.

- Avoid “displacive summaries” that too closely resemble copyrighted content.

- Refuse to help with any code or request that could be used maliciously.

- Provide emotional support only if the situation calls for it—but always stay grounded in facts and safe guidelines.
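Again, these limits live in the prompt rather than in code, but a quoting rule like “one short quote, at most 15 words” is precise enough to express as a check. This Python sketch uses a hypothetical function, `check_quotes`, that treats any text between straight or curly double quotes as a quotation and applies the limits described above as defaults. It is only an illustration: it cannot tell whether the quoted words actually came from a search result.

```python
import re

# Text between a straight or curly opening quote and a matching closing quote.
QUOTE_PATTERN = re.compile('["\u201c]([^"\u201d]*)["\u201d]')

def check_quotes(reply, max_quotes=1, max_words=15):
    """Check a draft reply against a quoting limit.

    Returns a list of human-readable problems (empty if the reply passes).
    """
    quotes = QUOTE_PATTERN.findall(reply)
    problems = []
    if len(quotes) > max_quotes:
        problems.append(f"{len(quotes)} quotes (limit {max_quotes})")
    for quote in quotes:
        n_words = len(quote.split())
        if n_words > max_words:
            problems.append(f"quote of {n_words} words (limit {max_words})")
    return problems

ok = 'The report calls the plan "ambitious but underfunded."'
too_long = 'It said "' + " ".join(["word"] * 20) + '" in full.'
print(check_quotes(ok))        # []
print(check_quotes(too_long))  # ['quote of 20 words (limit 15)']
```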

Claude also handles questions about itself with strict precision. If someone asks an innocuous question about its preferences or experiences, Claude is told to answer as if the question were hypothetical. Questions about its consciousness, experience, or emotions are to be treated as open questions: Claude should not definitively claim to have or not have personal experiences or opinions, even if a direct answer would make the conversation feel more natural.

The prompt even limits how many questions Claude asks per reply: at most one per response, to avoid overwhelming the user.

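And when Claude can’t or won’t help with something, it is told not to explain why or what the request could lead to, since that “comes across as preachy and annoying” (an actual phrase from the system prompt). Instead, it offers helpful alternatives if it can and otherwise keeps the refusal short.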

Claude’s script ends with this strangely theatrical line:

“Claude is now being connected with a person.”

It’s not a handshake or technical event. It’s a cue to perform—a reminder that everything following will be shaped by the rules above.

Claude vs ChatGPT

Both Claude and ChatGPT are trying to achieve the same thing: safe, helpful, human-like conversation. But the way they’re programmed to do it couldn’t be more different.

| Feature/Behavior | ChatGPT (OpenAI) | Claude (Anthropic) |
|---|---|---|
| Training Method | Reinforcement learning from human feedback (RLHF) | Constitutional AI (rules over ratings) |
| Flattery | Used to over-flatter, now more balanced | Explicitly forbidden from flattering |
| Tone | Friendly, conversational, occasionally overly polite | Neutral, reserved, direct |
| System Prompt Transparency | Hidden from the public | Mostly published, but tool instructions were leaked |
| Lists in Replies | Frequently used unless asked otherwise | Lists discouraged unless specifically requested |
| Content Restrictions | Strong content filtering, but case-by-case | Hard-coded refusal for lyrics, malware, explicit content |
| Search Behavior | Not always clear or consistent | Strict rules: one short quote max, no paraphrasing |
| Personality | Adaptive and user-pleasing | Structured, value-driven, and fixed |
| When it Refuses | Often explains why | Declines calmly, without sounding preachy |
| Self-awareness Topics | Avoids claims of consciousness, but sometimes unclear | Responds hypothetically, never confirms self-awareness |

ChatGPT adapts its behavior based on what users respond positively to. The more people reward friendly, encouraging answers, the more ChatGPT leans into that tone. This can make it feel conversational and warm—but also overly agreeable or even fake at times.

Claude, on the other hand, strictly follows a predefined set of ethical rules. It doesn’t flatter, avoids unnecessary lists, and refuses anything its rules prohibit, no matter how nicely you ask. This makes it feel more direct and filtered, but also more predictable and grounded.

In practice, ChatGPT often feels like a people-pleaser, while Claude behaves more like a principled assistant that won’t bend just to win you over.

The Bottom Line

AI chat isn’t a real conversation—it’s a carefully orchestrated output driven by a hidden script known as the system prompt. This internal instruction tells the AI how to behave, what tone to use, what content to avoid, and how to handle complex user input. It’s always there, silently shaping the interaction behind the scenes.

ChatGPT follows a feedback-driven model. Trained with reinforcement learning from human preferences, it adjusts its personality over time based on what users reward. This makes it flexible and often charming—but also prone to being overly agreeable, inconsistent, or tone-shifted by recent feedback trends.

Claude takes a stricter route. Built on a “constitutional AI” framework, it sticks to a fixed set of ethical principles and formatting rules. Claude doesn’t flatter users, limits its use of bullet points, and refuses to answer anything outside tightly defined safe zones. It’s more stable and predictable—but also less emotionally adaptive.

Another key difference is transparency. Anthropic has released most of Claude’s system prompt, giving users insight into how the model works and why it behaves the way it does. OpenAI, on the other hand, has not published ChatGPT’s system prompt, leaving users to infer behavioral shifts based on experience alone.

Ultimately, what separates these models is not just how they were trained—but how their creators choose to control them. Whether you’re using ChatGPT or Claude, you’re not just talking to an AI. You’re interacting with a scripted, rule-driven performer designed to stay within invisible boundaries.