Meta’s LLaMA series keeps improving, especially with LLaMA 3.1, the latest version of its large language model.

LLaMA 3.1 (Large Language Model Meta AI 3.1) introduces enhanced and magical capabilities, boasting a staggering 405 billion parameters. It also performs better in natural language processing and multimodal tasks.

The artificial intelligence market is growing faster, and the industry’s value is projected to increase by over 13x over the next six years. People who don’t adopt or learn about these tools now will be left behind.

This comprehensive guide will explore LLaMA 3.1’s features, history, applications, and future prospects and compare it to other leading AI models in the market. Let’s enjoy the ride because, by the end of the article, you will have all the basic knowledge on this model, what it means, and how it can be a helpful tool for you.

Overview of LLaMA 3.1

LLaMA 3.1 is a state-of-the-art open-source generative AI model developed by Meta. It is designed to excel in a wide range of tasks, including text generation, image recognition, and coding assistance.

This model is built to be multimodal, meaning it can seamlessly process and generate content across various formats, including text, images, audio, and video.

The model has a large context window and a knowledge cutoff date of December 2023. Therefore, the model can provide accurate and relevant responses, again making it a powerful tool for many use cases.

Key Specifications

- Model Size: LLaMA 3.1 features a substantial parameter count of over 405 billion parameters, making it one of the largest language models available. This extensive size allows it to capture complex language patterns and generate nuanced text quite easily compared to others.

- Context Window: The model supports a context window of 128,000 tokens, enabling it to maintain coherence over lengthy interactions and manage extensive conversations effectively. This is particularly beneficial for applications that require detailed and continuous dialogue, like special agents and chats.

- Knowledge Cutoff: LLaMA 3.1’s knowledge cutoff date is December 2023. This ensures that it incorporates the latest information and provides a very closely relevant response, making it particularly helpful for users seeking current data.

- Multimodal Capabilities: The model can process and generate content across multiple formats, including text, images, and audio.

- Users can generate:

- Text: Articles, reports, and marketing copy that can be used in blogs, newsletters, and social media.

- Images: Visual content based on descriptive text.

- Audio: Voiceovers and soundscapes for multimedia presentations, like videos and interactive content.

- Video: Short video clips or animations that combine text and visuals for storytelling.

- Users can generate:

Company Background

Meta, Facebook’s parent company, has been at the forefront of AI research and development for years. The company began its journey with its launch in 2004 and later focused on machine learning techniques to enhance its social media platforms.

One of the earliest examples of this was the introduction of the News Feed algorithm in 2006, which utilized machine learning to personalize user content based on their interactions.

Over the years, Meta has continued to innovate, launching several AI-driven features across its platforms, including facial recognition technology in 2010 and the automatic translation feature in 2015, which leveraged neural machine translation to improve communication across languages.

In 2018, Meta made significant strides with the introduction of PyTorch, an open-source machine-learning library that has become a cornerstone for AI research and development today.

This library facilitated the development of numerous AI models and applications, including those used in computer vision and natural language processing.

Fast-forward to 2020, and Meta has established itself as a key player in the AI space, focusing on creating responsible AI systems. Their introduction of the LLaMA series is not surprising; it is part of a broader strategy to keep them at the forefront with other players in advanced AI technologies.

Notably, LLaMA is an open-source model, and Meta aims to foster collaboration among developers and researchers. As everyone has access to the resources, this will encourage innovation and rapid advancements in AI.

This initiative aligns with CEO Mark Zuckerberg’s vision of creating a more open and inclusive AI ecosystem, similar to Linux’s impact on operating systems.

Capabilities of LLaMA 3.1

LLaMA 3.1 pushes the boundaries of what is possible in natural language processing and generation. Its advanced capabilities make it a versatile tool for a wide range of applications:

- Unparalleled Language Understanding: LLaMA 3.1 excels at comprehending complex and ambiguous language because of its extensive training on a diverse corpus of text data. The model can quickly grasp nuanced meanings, detect context, and handle idioms and colloquialisms, enabling it to engage in more natural and human-like conversations.

- Multilingual Proficiency: LLaMA 3.1 supports eight languages, including English, German, French, Italian, Portuguese, Hindi, Spanish, and Thai, and even more that has yet to be officially announced. This multilingual capability allows users from diverse linguistic backgrounds to interact with the model seamlessly.

- Reasoning and Inference: LLaMA 3.1 is designed to go beyond surface-level understanding and engage in deeper reasoning. The model can draw inferences, make logical connections, and provide well-reasoned responses to complex queries. This capability is instrumental in applications that require analytical thinking, such as research, decision-making, and problem-solving.

- Multimodal Integration: While many language models focus solely on text, LLaMA 3.1 is built to handle multimodal inputs, including images and audio. The model can process and generate content across these formats and effectively understand and respond to multimedia information.

- Adaptability and Customization: One of LLaMA 3.1’s key strengths is its flexibility and adaptability. The model can be fine-tuned to specific datasets or tasks, allowing it to specialize in domains such as healthcare, finance, or education. This customization enables developers to tailor LLaMA 3.1 to their unique needs and create highly specialized AI applications.

- Ethical and Responsible AI: Meta has strongly emphasized developing LLaMA 3.1 as an ethical and responsible AI model. The model incorporates safety measures to prevent the generation of harmful or biased content and is designed to respect user privacy and data security. Organizations and individuals who prioritize ethical practices find this really helpful in standing firm to their ethos.

- Scalability and Performance: LLaMA 3.1 is built to handle large-scale applications and high-volume data processing. The model’s efficient architecture and optimized training process enable it to deliver fast and accurate responses, even when dealing with massive amounts of information.

- Open-Source Accessibility: By releasing LLaMA 3.1 as an open-source model, Meta aims to democratize access to advanced AI technology. This allows developers and researchers worldwide to experiment with, improve upon, and build innovative applications using LLaMA 3.1. The open-source approach ensures that LLaMA 3.1 is accessible to a broad audience.

History of the LLaMA Model Family

LLaMA 3.1 is part of a lineage of models that began with LLaMA 1.0. Each iteration has introduced improvements in performance, safety, and usability:

- LLaMA 1.0: The initial model, which was launched on February 24, 2023, focused on establishing a strong foundation for natural language processing and understanding, setting the stage for future advancements.

- LLaMA 2.0: This version was released on July 18, 2023. It introduced enhanced text generation and language understanding capabilities, achieved higher accuracy in various benchmarks, and expanded the range of supported languages.

- LLaMA 3.0: This was released in early 2024. It marked a significant leap in performance, particularly in reasoning tasks and coding capabilities. It featured improved context handling and was trained on a more diverse dataset.

- LLaMA 3.1: The latest iteration, LLaMA 3.1, builds on its predecessors by offering improved performance, expanded language support, and enhanced safety measures. It has been tested against various benchmarks, including the Massive Multitask Language Understanding (MMLU) test, where it achieved scores exceeding 85% in general knowledge across multiple subjects.

Use Cases for LLaMA 3.1

LLaMA 3.1 is a versatile AI model that can be applied across various domains, showcasing its adaptability and power in real-world applications. Below are some key use cases that highlight the model’s capabilities:

- Content Creation: LLaMA 3.1 generates high-quality written content, making it an invaluable asset for writers, marketers, and content creators. It can produce articles, reports, blog posts, and marketing copy, significantly reducing the time and effort required for content production. With it, users can easily maintain a consistent voice and style across their materials.

- Creative Applications: The model’s image generation capabilities enable users to create compelling visuals based on textual descriptions. This feature is particularly useful for graphic designers, advertisers, and artists who need to produce unique visuals for campaigns, social media, or other creative projects. Users can also use it to enhance their storytelling through rich, engaging imagery that complements their written content.

- Education: LLaMA 3.1 can be utilized in educational settings to develop interactive learning materials, quizzes, and presentations. Educators can create personalized learning experiences by generating tailored content that addresses their students’ specific needs.

- Customer Support: Businesses can integrate LLaMA 3.1 into their customer service platforms to deliver efficient, personalized support. The model’s natural language understanding capabilities enable it to handle various customer inquiries, providing accurate answers and resolving issues quickly. Businesses can automate responses to frequently asked questions to enhance customer satisfaction and free up human agents for more complex tasks.

- Software Development: LLaMA 3.1 is a valuable resource for developers looking to streamline their workflows, just like Claude. The model can assist in writing, debugging, and optimizing code, making it easier for programmers to tackle coding challenges. It can help reduce time spent on repetitive tasks, allowing developers to focus on more strategic aspects of their projects.

- Global Communication: With its multilingual processing capabilities, LLaMA 3.1 facilitates communication across diverse cultures and languages. This feature is particularly beneficial for international businesses that need to engage with customers and partners from various linguistic backgrounds.

- Mental Health and Wellbeing: LLaMA 3.1 can be employed in mental health applications. It can provide support through chatbots or virtual therapists. It can generate empathetic responses and offer coping strategies that can assist users in managing stress, anxiety, or other mental health challenges.

- Research and Data Analysis: Researchers can leverage LLaMA 3.1 to analyze large datasets and generate insights. The model can assist in summarizing research findings, drafting reports, and even formulating hypotheses based on existing literature. It is a valuable tool for academics and professionals in various fields. However, it is worth noting that the model currently can’t browse the Internet for an instant answer but rather relies on its trained datasets.

Technical Specifications

LLaMA 3.1 is built on a powerful neural network architecture that enables it to process and generate text efficiently. The model’s technical specifications include:

Model Architecture: LLaMA 3.1 utilizes a decoder-only transformer architecture, which is renowned for its ability to handle long-range dependencies and parallel processing. This architecture allows the model to process input data and generate coherent outputs efficiently.

- Transformer Blocks: The model is composed of multiple transformer blocks, each containing:

- Multi-Head Attention Mechanism: This allows the model to focus on different parts of the input text simultaneously, capturing a wide range of contextual information essential for understanding complex sentence structures and nuanced meanings.

- Feedforward Neural Network: Each block includes a feedforward network that transforms the output from the attention mechanism. This introduces non-linearity and enhances the model’s ability to capture intricate patterns in the data.

- Positional Encoding: To maintain the order of words in a sequence, LLaMA 3.1 employs positional encoding, which adds unique vectors to each token’s embedding based on its position. This enables the model to understand the relative positioning of words, which is crucial for language comprehension.

Parameter Count: LLaMA 3.1 features an impressive 405 billion parameters, which allows it to achieve sophisticated language comprehension and generation capabilities.

Context Window: The model supports a context window of 128,000 tokens, allowing it to maintain coherence over lengthy interactions.

Processing Speed: LLaMA 3.1 is optimized for high-speed processing, ensuring timely and responsive outputs even for complex tasks. The model’s architecture is designed to maximize computational efficiency, allowing it to generate responses quickly, which is helpful for real-time applications.

Safety Measures: LLaMA 3.1 incorporates advanced safety protocols to prevent generating harmful or biased content. Key safety features include:

- Content Filtering: The model employs sophisticated filtering techniques to identify and exclude inappropriate or harmful content during generation.

- Bias Mitigation Techniques: LLaMA 3.1 has been trained to reduce biases present in the training data, ensuring that the outputs are fair and representative.

Training Infrastructure: LLaMA models are trained on a distributed system of Nvidia H100 GPUs, which are specialized AI accelerators designed to handle extensive machine-learning tasks efficiently. The training process involves:

- Pre-training and Fine-tuning: LLaMA 3.1 undergoes a two-stage training process. During pre-training, the model is exposed to a massive corpus of text data, learning to predict the next word in a sentence. Fine-tuning involves adapting the pre-trained model to specific tasks using smaller, task-specific datasets.

- Efficient Training Techniques: To optimize training efficiency, LLaMA 3.1 employs techniques such as mixed-precision training and gradient checkpointing. Mixed-precision training utilizes lower-precision arithmetic to speed up computations and reduce memory usage without sacrificing accuracy. Gradient checkpointing saves memory by storing only certain activations during the forward pass, recomputing them during the backward pass as needed.

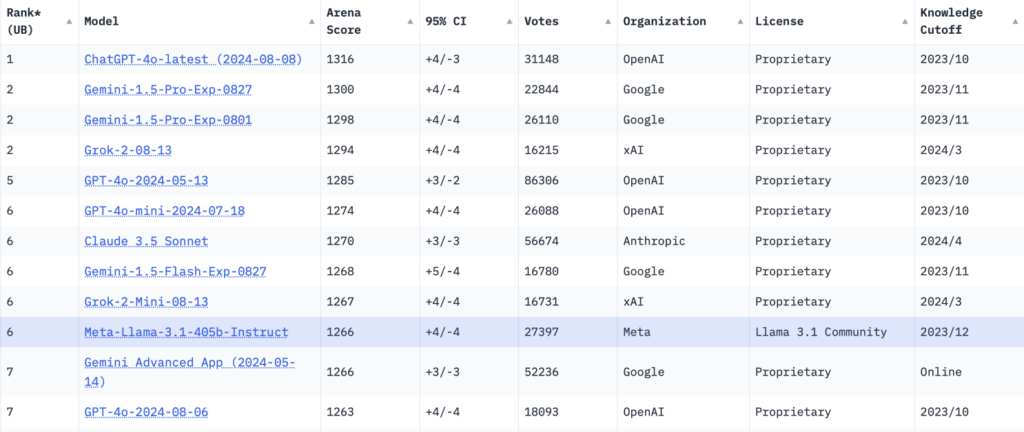

Performance Evaluation: LLaMA 3.1’s performance is rigorously evaluated using various benchmarks that test its language understanding and generation capabilities. The model consistently outperforms previous and state-of-the-art versions on machine translation, summarization, and question-answering tasks. Extensive human evaluations reveal that LLaMA 3.1 is competitive with leading models like GPT-4 and Claude 3.5 Sonnet, demonstrating its state-of-the-art capabilities across many real-world scenarios.

Comparative Analysis

Here is a comparative analysis of LLaMA vs ChatGPT, Claude, and Gemini.

LLaMA 3.1 vs. ChatGPT

- Model Size: LLaMA 3.1 boasts 405 billion parameters, while ChatGPT (specifically GPT-4) has around 175 billion parameters. Despite ChatGPT’s smaller size, it is highly optimized for conversational tasks and often delivers more sophisticated dialogue.

- Performance on Benchmarks: LLaMA 3.1 has shown remarkable performance in various benchmarks, often outperforming ChatGPT in specific tasks like mathematical reasoning and code generation. However, ChatGPT excels in conversational fluency and context retention, particularly in informal settings.

- Customization and Accessibility: LLaMA 3.1 is open-source, allowing developers to fine-tune the model for specific applications and domains. In contrast, ChatGPT is proprietary, limiting customization options but providing a user-friendly interface through platforms like OpenAI’s API.

- Offline Usability: LLaMA 3.1 can be deployed offline, making it suitable for applications with limited internet connectivity or data privacy concerns. ChatGPT, however, requires an internet connection to access OpenAI’s servers.

LLaMA 3.1 vs. Claude AI

- Parameter Count: LLaMA 3.1’s 405 billion parameters significantly surpass Claude 3.5 Sonnet‘s 175 billion parameters. This larger size enables LLaMA to process more complex queries and generate richer content.

- Context Window: Both models support extensive context windows, with LLaMA 3.1 allowing for 128,000 tokens. Claude 3.5 Sonnet can handle a similar context window, but it is particularly optimized for maintaining conversational context over extended interactions, making it more effective in dialogue-heavy applications.

- Multimodal Capabilities: While both models support multimodal interactions, Claude AI has advanced visual reasoning capabilities that allow it to interpret and generate images more effectively than LLaMA 3.1.

- Performance in Coding Tasks: In coding assistance, LLaMA 3.1 has demonstrated high accuracy in generating functional code snippets. However, Claude 3.5 Sonnet has been noted for its superior performance in complex coding challenges and debugging tasks, making it a preferred choice for software engineers.

LLaMA 3.1 vs. Gemini 1.5 Pro

- Model Size: LLaMA 3.1’s 405 billion parameters make it one of the largest language models, compared to Gemini 1.5 Pro, which has over 200 billion parameters. This size advantage allows LLaMA to handle more intricate language tasks.

- Context Window: Both models feature a context window of 128,000 tokens, enabling them to maintain coherence over lengthy interactions. This similarity allows for the effective handling of extended conversations and complex queries.

- Multimodal Interaction: Gemini 1.5 Pro excels in multimodal capabilities, particularly in generating images from text descriptions. While LLaMA 3.1 has some image generation capabilities, they are more limited than Gemini’s advanced features.

- Real-World Applications: Gemini 1.5 Pro has been integrated into various Google products, enhancing their functionality with AI-driven features. In contrast, LLaMA 3.1’s open-source nature allows for broader experimentation and customization by developers, fostering innovation in diverse applications.

Summary of Comparisons

In summary, while LLaMA 3.1 stands out for its large parameter count and open-source accessibility, it faces competition from ChatGPT, Claude AI, and Gemini 1.5 Pro in various aspects:

- ChatGPT excels in conversational fluency and user-friendliness but lacks the customization options available with LLaMA.

- Claude AI offers advanced visual reasoning and coding assistance but operates within a proprietary framework.

- Gemini 1.5 Pro provides strong multimodal capabilities and integration with Google services, while LLaMA 3.1’s open-source model encourages community-driven innovation.

Ultimately, the choice between these models depends on specific use cases, desired features, and the level of customization required by developers, users, and organizations.

Future Outlook

The future of LLaMA AI looks promising, with ongoing developments aimed at further enhancing its capabilities. Upcoming releases like LLaMA 4.0 are expected to introduce even more advanced features, such as improved multimodal reasoning, enhanced memory capabilities, and expanded language support.

FAQs

What can LLaMA AI do? LLaMA 3.1 can generate text, images, audio, and video. It is a versatile tool for various applications, including content creation, coding assistance, and customer support.

Who is behind Meta AI LLaMA 3.1? / Who owns LLaMA 3.1? LLaMA 3.1 is developed and owned by Meta, the parent company of Facebook.

When is LLaMA 4.0 coming? Meta has not yet announced a specific release date for LLaMA 4.0, but it is expected to be launched later this year or early next year. It will build upon the success of LLaMA 3.1 and introduce even more advanced features and capabilities.

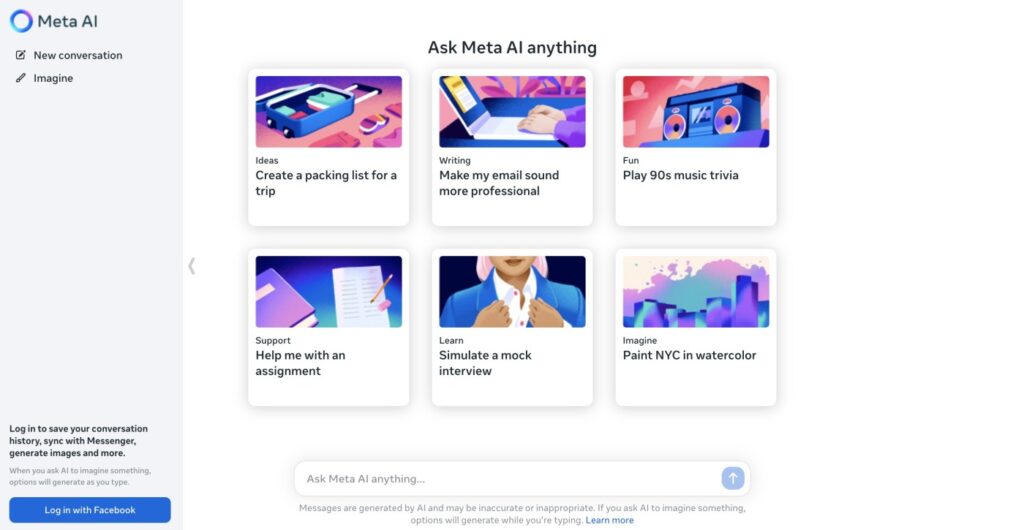

How to download LLaMA AI? LLaMA 3.1 is available for direct download, and users can also access it on the web, which provides an interface for interacting with the model.

Where to use LLaMA AI? Users can leverage LLaMA 3.1 on platforms like Fello AI, which allows for easy access to the model’s capabilities without the need for extensive setup or technical knowledge.