For the past few years, Midjourney, the image generation tool used by over 20 million creatives, has set the benchmark for stunning AI-generated images. Its distinct artistic style, easy prompting, and fast evolution made it the favorite tool for designers, storytellers, and creatives around the world. But until now, there was one limitation: it could only generate images. That’s about to change in a very big way.

As tools like Sora, Veo 3, and Pika push AI video into the mainstream, Midjourney is officially teasing its own long-awaited video generation feature. No longer limited to still frames, the company is preparing to enter the competitive world of generative video — and if the initial previews are any indication, it could be one of the most important breakthroughs in creative technology this year.

So what exactly is coming, and why does it matter so much?

Let’s break it down.

What Will Midjourney Video Do in Version 1?

Midjourney’s video generation feature is being released in carefully planned stages, starting with a limited experimental rollout. This first version won’t be packed with every advanced feature — but it will offer a meaningful first look at the company’s vision for AI-generated video.

Image-to-Video as the Starting Point

Rather than diving straight into prompt-to-video generation like OpenAI’s Sora or Google’s Veo 3, Midjourney will begin with an image-to-video workflow. Users will be able to select one of their previously generated Midjourney images and convert it into a short animated video.

Think of it like breathing life into a still frame — using AI to simulate motion, depth, and atmosphere while staying true to the image’s original style and composition. This approach plays directly to Midjourney’s strengths. Its users are already comfortable generating visually rich images through carefully crafted prompts. Now, those same prompts become the basis for moving visuals — no need to learn a new interface or toolset.

Early Experimental Phase

As with the early releases of Sora and Runway, Midjourney’s video generator will initially launch as a beta, but not for everyone at once. Access will likely begin with select testers or Pro subscribers, following the company’s usual phased rollout strategy.

In this initial stage, users should anticipate clips of 5 to 10 seconds at roughly 720p to 1080p resolution. That aligns with early previews from OpenAI’s Sora and Runway’s Gen-2, which delivered video snippets of similar length and quality. These formats balance manageable file sizes and rendering speeds with visual fidelity, a practical starting point for Midjourney. However, we can expect limitations: variable visual consistency, and no built-in audio, sound design, or in-editor timeline control, at least not initially.

Still, the core mission is ambitious: prove that Midjourney’s signature aesthetic and quality can translate into motion. If that visual fidelity holds up, the experimental phase could quickly evolve into one of the most talked-about creative platforms on the internet.

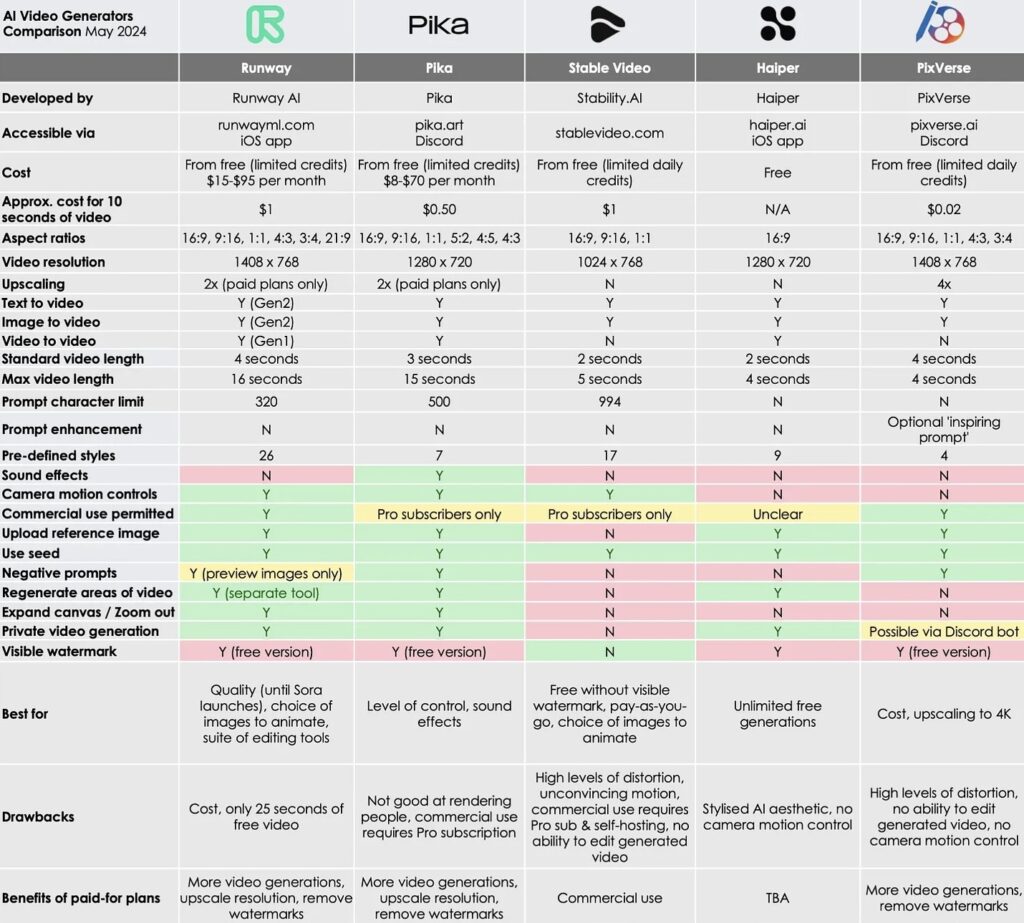

AI Video Generators Comparison [Source]

Video Style Examples

While Midjourney Video hasn’t officially launched yet, early previews from testers and insiders already show off an impressive range of visual styles. From stylized fantasy and paint-like graphics to cinematic scenes and quirky mashups, these short clips hint at the creative potential this new tool will unlock. Below are a few standout examples:

- A joyful young woman in full medieval armor takes a selfie with a baby dragon on her shoulder. Cinematic lighting and fluid motion bring a fairytale vibe to life.

🔥 Midjourney video is almost here…

And it has, somehow, that incredible artistic aesthetic that MJ is famous for.

Yes, these are all MJ video generations!!! 🧵👇 pic.twitter.com/GYCZXmAbNJ

— Javi Lopez ⛩️ (@javilopen) June 11, 2025

- A beautifully animated short where complex objects (like a mirror reflection or limbs) move, showcasing Midjourney’s signature clean, stylized aesthetic.

- A scene that highlights Midjourney’s sharp polygon-like visuals and fluid motion in stylized cartoon form.

2. It's incredible good for cartoons. pic.twitter.com/5gb5YrK721

— Javi Lopez ⛩️ (@javilopen) June 11, 2025

- A whimsical animated scene featuring a glowing cartoon tooth surrounded by colorful musical notes and cheerful dancing characters — showcasing Midjourney’s playful, Pixar-like aesthetic and smooth motion rendering.

- A piece where only small details move — like a character or light reflections — demonstrating precision control and refined motion.

8. And it seems it will be possible to generate videos in which only A LITTLE THING moves. pic.twitter.com/tbH0u0CTam

— Javi Lopez ⛩️ (@javilopen) June 11, 2025

- A grumpy Yoda-like character in a Santa outfit sitting in a wheelchair next to a Christmas tree: a surreal, holiday-themed mashup showcasing stylized motion and personality.

- A serene, sunlit living room scene featuring soft shadows, natural textures, and a mounted skull. This showcases Midjourney’s ability to generate lifelike, minimalist interior aesthetics with refined lighting and composition.

16. Interior design. pic.twitter.com/DoOKthHMpp

— Javi Lopez ⛩️ (@javilopen) June 11, 2025

Where This Could Lead In The Big Picture

Midjourney entering the video space isn’t just about adding another checkbox to its feature list — it’s about setting the stage for a much bigger transformation. Let’s explore how generative video could reshape Midjourney’s position in the AI ecosystem and creative industries.

Becoming The Aesthetic Go-To For Video

Midjourney’s biggest strengths have always been style and photorealism. Its images often feel more curated, cinematic, and compositionally refined than those of other AI generators. If that same sensibility translates into video, Midjourney could quickly become the preferred tool for creators looking to produce visually stunning short films, visualizers, brand content, or even animation references.

Unlike tools like Runway or Pika, which often struggle with maintaining visual coherence or style, Midjourney already has a strong aesthetic foundation. Adding motion could make it the tool of choice for visual storytellers who care about vibe, not just convenience.

Potential Prompt-to-Video in the Future

While image-to-video is just the beginning, it’s not hard to imagine where this is going. Eventually, Midjourney could offer full prompt-to-video capabilities, letting users describe a scene with a prompt and receive a beautifully animated result — all in the brand’s signature visual style.

This might start with prompt-guided motion (e.g., “a storm rolls in,” “the camera zooms out”) and later evolve into full narrative generation. If Midjourney maintains quality and usability, it could rival even the biggest names in generative video.

Why It Makes Sense for Midjourney to Enter Video Now

The timing of this release isn’t random. Midjourney has waited and observed the first wave of AI video tools — and now, it’s ready to make its own move. Here is why now is the right time for Midjourney to step into the video arena.

The Market Has Matured (Sora, Runway, Veo 3…)

The AI video landscape has entered a new phase of maturity. OpenAI’s Sora stunned the world with its photorealistic, story-driven video generations. Google’s newly revealed Veo 3 followed shortly after, promising even higher resolution, authentic clips, and more advanced motion control — drawing comparisons to professional-grade cinematography. Meanwhile, tools like Runway continue iterating rapidly, with faster generation times and creative control features aimed at everyday creators.

But despite this momentum, many existing tools still struggle when it comes to style. Outputs can often feel generic, sterile, or technically impressive but without soul. And that’s where Midjourney could be different — by focusing not just on motion realism, but on creating video that feels designed, illustrated, and artistically curated.

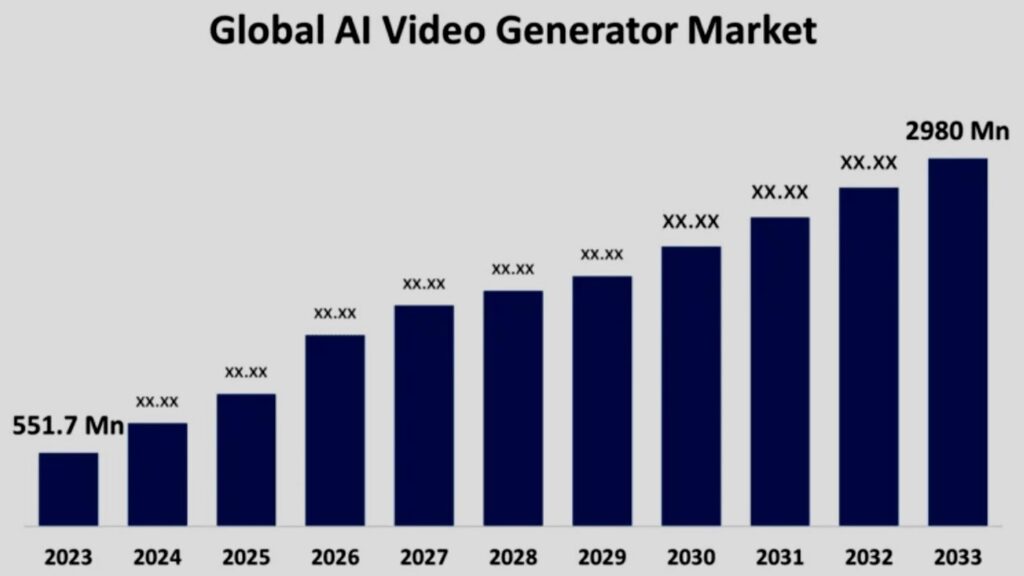

Launching now gives Midjourney the perfect timing: the audience is already primed, the foundational tech has been validated, and yet there’s still a clear gap when it comes to visually distinct, emotionally compelling video generation. And the opportunity is only growing — according to Yahoo Finance, the AI video generation market is expected to grow at an annual rate of 18.37% through the decade, making this not just a creative move, but a highly strategic one.
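To get a feel for what an 18.37% annual rate means in practice, here is a minimal Python sketch. It assumes the figure compounds once per year (the article only calls it an "annual rate"), and it uses a hypothetical starting index of 100 rather than any real market size. The function names are illustrative, not taken from any source.

```python
import math


def project_market(base: float, annual_rate: float, years: int) -> list[float]:
    """Projected values for year 0 through `years`, compounding once per year."""
    return [base * (1 + annual_rate) ** year for year in range(years + 1)]


def doubling_time(annual_rate: float) -> float:
    """Years needed for the value to double at a constant compound rate."""
    return math.log(2) / math.log(1 + annual_rate)


if __name__ == "__main__":
    RATE = 0.1837  # the growth rate cited above, assumed to compound annually
    BASE_INDEX = 100.0  # hypothetical index value, not a real market figure

    for year, value in enumerate(project_market(BASE_INDEX, RATE, 5)):
        print(f"year {year}: {value:.1f}")
    print(f"doubling time: {doubling_time(RATE):.1f} years")
```

Under those assumptions, the market would double in a little over four years, which is the kind of pace that makes entering now a strategic decision as much as a creative one.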

The Community Wants More

Midjourney’s community is massive, loyal, and hungry. Many users already combine their images with animation tools like EbSynth or Runway to make videos manually — a clear sign of pent-up demand. The subreddit is full of experiments, Discord threads overflow with frame interpolation tips, and creators on YouTube regularly post Midjourney video hacks that rack up thousands of views.

The desire for native video functionality is undeniable. For many users, this next step feels like a natural evolution of the tool they already love. Midjourney isn’t trying to create demand from scratch — it’s simply answering the call.

Global AI Video Generation Market Predicted Growth Graph [Source]

When Will Midjourney Video Be Released?

While the new video feature is already being tested behind the scenes, Midjourney has not set a public launch date yet. What we do know is that development is moving quickly — and the company tends to release major features in bursts once they reach a certain level of internal confidence.

Given how polished early previews look, it’s reasonable to expect a gradual rollout sometime in the second half of this year. Some industry watchers have speculated that we might see a limited preview tied to a Discord-only interface before a full web launch — which would mirror how Midjourney handled earlier alpha tools.

Midjourney’s founder David Holz has hinted at a tester-only first wave, but full web access could follow sooner than expected if user feedback is positive and technical hurdles are minimal. For now, the best clues come from the community itself: increasing leaks, tester reactions, and prototype footage suggest that the wait will be worth it.

Final Thoughts

Midjourney Video isn’t just a feature drop — it’s the beginning of a new chapter for one of the most beloved AI tools in the world.

By combining its signature aesthetic with animation, Midjourney is poised to open entirely new creative workflows. Whether you’re an artist, designer, filmmaker, or just someone who loves dreaming visually, this update could unlock powerful new ways to tell stories.

There are challenges ahead — legal, technical, cultural. But if any company can turn video generation into something breathtaking, intuitive, and creatively liberating, it’s probably the one that redefined how we make images.

The film industry might not feel the impact tomorrow. But soon, it’s going to be impossible to ignore.