In late May 2025, Chinese startup DeepSeek quietly rolled out R1-0528, a beefed-up version of its open-source R1 reasoning model. The upgrade boosts the parameter count from 671 billion to 685 billion, adds a lightweight distilled variant (DeepSeek-R1-0528-Qwen3-8B), and publishes all weights, training recipes and docs under an MIT license on GitHub and Hugging Face.

🚀 DeepSeek-R1-0528 is here!

🔹 Improved benchmark performance

🔹 Enhanced front-end capabilities

🔹 Reduced hallucinations

🔹 Supports JSON output & function calling

✅ Try it now: https://t.co/IMbTch8Pii

🔌 No change to API usage — docs here: https://t.co/Qf97ASptDD

DeepSeek (@deepseek_ai), May 29, 2025


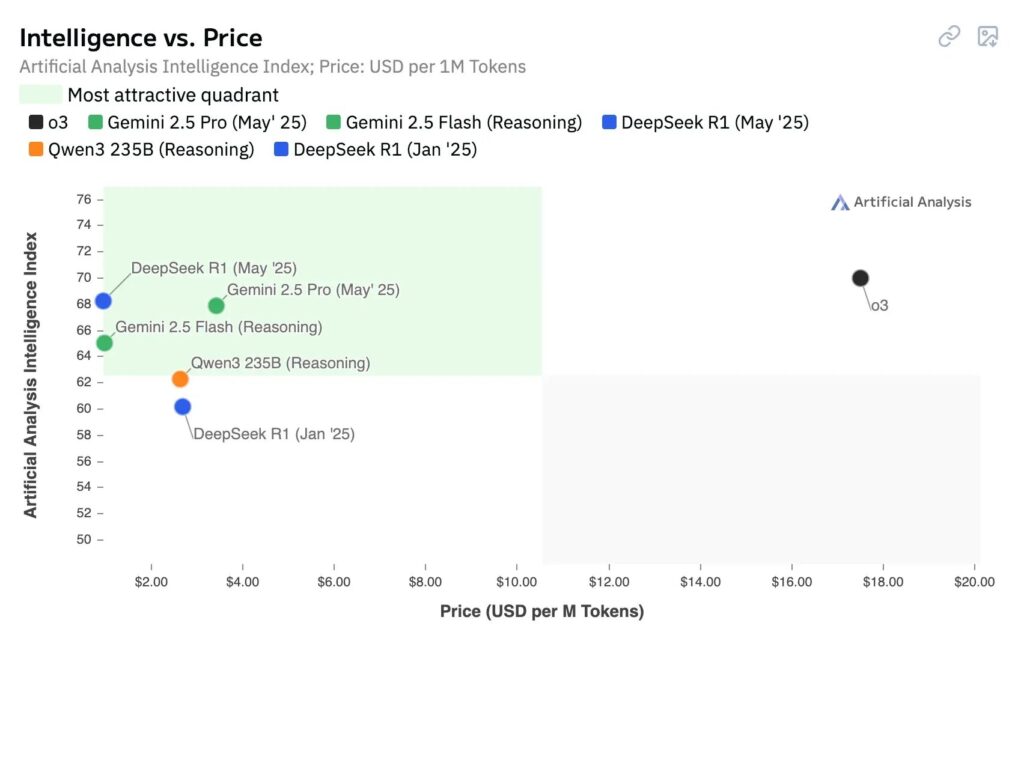

Under the hood, R1-0528 works through longer, more thorough chains of thought to improve multi-step logic, applies post-training tweaks to curb hallucinations, and reduces latency. On the integration side, it introduces JSON output, native function calling and simpler prompting with system-prompt support. Its performance on math, coding and logic benchmarks now sits within striking distance of OpenAI’s o3 and Google’s Gemini 2.5 Pro, and the open weights can be self-hosted without per-token fees or usage restrictions.

The Open-Source Advantage

DeepSeek’s flagship R1-0528 is fully open source: its 685 billion parameters, training recipes and docs are published under an MIT license on Hugging Face and GitHub. This level of transparency sharply contrasts with the locked-down model weights and usage restrictions typical of OpenAI and Google.

Researchers can inspect, audit and fine-tune the weights directly instead of probing a closed model only through an API. Startups and enterprises that self-host avoid per-token fees and licensing negotiations, leaving them free to integrate, modify or extend the model for proprietary or specialized tasks.

Deployment is equally flexible. Organizations with enough on-prem GPU capacity (a model of this size needs multi-GPU hardware) can run R1-0528 locally to control costs, data privacy and latency, while others can use DeepSeek’s hosted API for rapid prototyping and scaling. By removing cost and access barriers, DeepSeek accelerates innovation for teams of all sizes.
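For the hosted route, DeepSeek describes its API as OpenAI-compatible. The sketch below only builds a chat-completion request with the Python standard library and sends nothing over the network. The endpoint `https://api.deepseek.com/chat/completions`, the model name `deepseek-reasoner` and the `DEEPSEEK_API_KEY` environment variable are assumptions to check against DeepSeek's current API docs; `build_chat_request` is a hypothetical helper.

```python
import json
import os
import urllib.request

# Assumptions (verify against DeepSeek's API docs): the endpoint is
# OpenAI-compatible and "deepseek-reasoner" selects the R1 reasoning model.
API_URL = "https://api.deepseek.com/chat/completions"
DEFAULT_MODEL = "deepseek-reasoner"


def build_chat_request(api_key: str, system_prompt: str, user_prompt: str,
                       model: str = DEFAULT_MODEL) -> urllib.request.Request:
    """Build, but do not send, a POST request for a chat completion."""
    payload = {
        "model": model,
        "messages": [
            # R1-0528 accepts a system prompt alongside the user turn.
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_prompt},
        ],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


if __name__ == "__main__":
    # Hypothetical env var name; the placeholder key keeps this runnable offline.
    key = os.environ.get("DEEPSEEK_API_KEY", "sk-placeholder")
    req = build_chat_request(key, "You are a concise assistant.",
                             "Summarize the MIT license in one sentence.")
    print(req.get_method(), req.full_url)
    # Sending it would be urllib.request.urlopen(req), then json.load the body.
```

Keeping request construction separate from sending makes the payload easy to inspect and test before any API key or network access is involved.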

What’s New in R1-0528

The R1-0528 release brings both core model enhancements and practical integration features. DeepSeek has expanded the model’s reasoning power while adding developer-friendly tools to streamline real-world deployment.

Core Model Improvements

DeepSeek sharpened R1’s reasoning abilities with three key changes:

- Parameter increase: the model grows from 671 billion to 685 billion parameters, allowing it to store and manipulate more nuanced patterns.

- Deeper chain-of-thought reasoning: the model works through longer step-by-step reasoning before it answers, which helps it handle multi-step logic problems more reliably.

- Post-training optimizations: new algorithmic tweaks reduce hallucinations (incorrect or fabricated outputs) and make outputs more stable and consistent.
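Because the chain of thought is emitted as ordinary text, applications usually separate it from the final answer. The sketch below assumes raw output from locally served R1-family weights, where the reasoning is wrapped in `<think>...</think>` tags (sometimes without the opening tag, when the chat template already inserted it); DeepSeek's hosted API instead returns the reasoning in a separate `reasoning_content` field. `split_reasoning` and `SplitOutput` are hypothetical helpers, not part of any DeepSeek library.

```python
from typing import NamedTuple

OPEN_TAG = "<think>"
CLOSE_TAG = "</think>"


class SplitOutput(NamedTuple):
    reasoning: str  # the chain-of-thought text
    answer: str     # the user-facing final answer


def split_reasoning(raw: str) -> SplitOutput:
    """Split raw R1-style output into reasoning and final answer.

    Assumes the reasoning ends with </think>; the opening <think> tag may be
    missing if the chat template already inserted it into the prompt.
    """
    close = raw.find(CLOSE_TAG)
    if close == -1:
        # No reasoning block found: treat the whole text as the answer.
        return SplitOutput(reasoning="", answer=raw.strip())
    head = raw[:close]
    start = head.find(OPEN_TAG)
    if start != -1:
        # Drop the opening tag and anything before it.
        head = head[start + len(OPEN_TAG):]
    answer = raw[close + len(CLOSE_TAG):]
    return SplitOutput(reasoning=head.strip(), answer=answer.strip())


if __name__ == "__main__":
    sample = "<think>\nThe user wants 2 + 2, which is 4.\n</think>\n\nThe answer is 4."
    result = split_reasoning(sample)
    print("reasoning:", result.reasoning)
    print("answer:", result.answer)
```

Showing only `answer` to end users while logging `reasoning` is one common way to keep long reasoning traces out of the UI.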

Developer-Friendly Enhancements

On the integration side, R1-0528 adds features aimed at making application building faster and more robust:

- JSON output & function calling: the model can return responses as structured JSON or emit structured requests to call external functions, which the application then executes, simplifying downstream parsing and automation.

- Simplified system prompts: system prompts are now supported, and you no longer need to prepend a special “<think>” token to force the model into its reasoning mode, which makes custom prompt design cleaner.

- Performance tweaks: DeepSeek cites enhanced front-end capabilities (better generation of web front-end code) alongside reduced inference latency, which together make for a more responsive development experience.
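On the application side, function calling is a loop: the request advertises tool schemas, the model replies with a tool call whose arguments arrive as a JSON string, and your code runs the function and sends the result back. The sketch below assumes DeepSeek accepts the OpenAI-compatible `tools` and `tool_calls` message shapes; `get_weather`, `REGISTRY`, `run_tool_calls` and the sample assistant message are hypothetical illustrations, not DeepSeek APIs.

```python
import json
from typing import Any, Callable


def get_weather(city: str, unit: str = "celsius") -> dict:
    """Hypothetical local tool; returns stub data for illustration only."""
    return {"city": city, "temperature": 21, "unit": unit}


# Tool schema in the OpenAI-compatible "tools" format (assumed to be accepted
# by DeepSeek's API); it would be sent alongside the messages in a request.
TOOLS = [{
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Get the current weather for a city.",
        "parameters": {
            "type": "object",
            "properties": {
                "city": {"type": "string"},
                "unit": {"type": "string", "enum": ["celsius", "fahrenheit"]},
            },
            "required": ["city"],
        },
    },
}]

# Maps tool names the model may request to local Python functions.
REGISTRY: dict[str, Callable[..., Any]] = {"get_weather": get_weather}


def run_tool_calls(message: dict) -> list[dict]:
    """Execute each tool call in an assistant message and build the
    'tool' role messages to send back to the model."""
    results = []
    for call in message.get("tool_calls") or []:
        fn = REGISTRY[call["function"]["name"]]
        # Arguments arrive as a JSON string that the application must parse.
        args = json.loads(call["function"]["arguments"])
        output = fn(**args)
        results.append({
            "role": "tool",
            "tool_call_id": call["id"],
            "content": json.dumps(output),
        })
    return results


# Hypothetical assistant message shaped like an OpenAI-compatible tool call.
example_message = {
    "role": "assistant",
    "content": "",
    "tool_calls": [{
        "id": "call_0",
        "type": "function",
        "function": {"name": "get_weather", "arguments": '{"city": "Hangzhou"}'},
    }],
}

if __name__ == "__main__":
    for msg in run_tool_calls(example_message):
        print(msg)
```

The returned `tool` messages would be appended to the conversation and sent in the next request so the model can compose its final answer; the model itself never executes `get_weather`.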

Performance Benchmarks

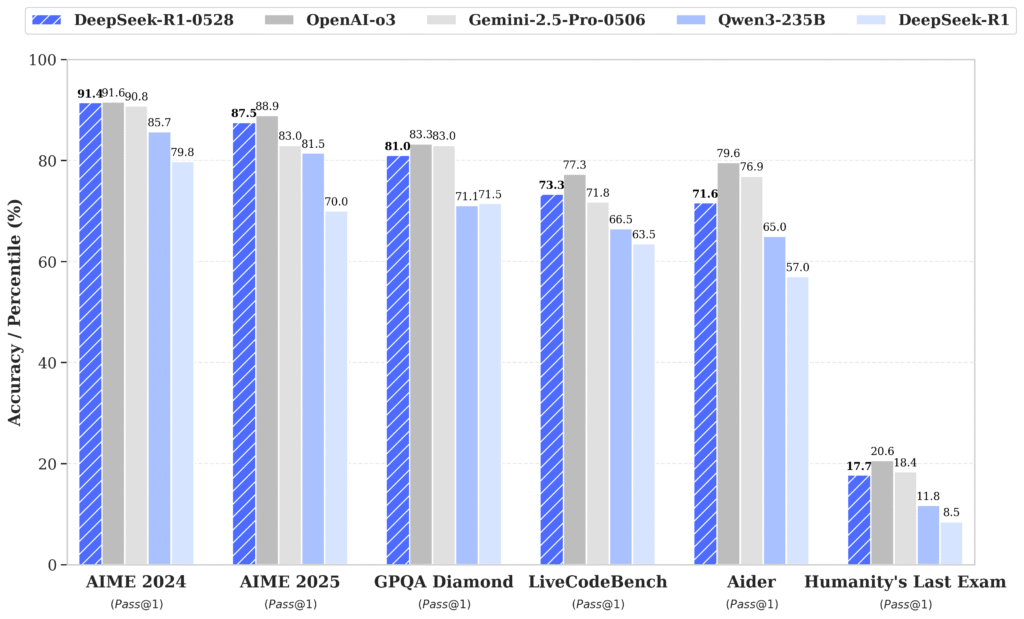

In DeepSeek’s own benchmark evaluations, R1-0528 delivers markedly better reasoning and coding results, narrowing the lead held by closed-source rivals. Across math, programming and complex-logic tasks, the updated model follows multi-step solutions more reliably and generates correct code more often.

Key improvements include:

- AIME 2025 (math reasoning): accuracy climbs from 70% to 87.5%, reflecting deeper step-by-step problem solving.

- LiveCodeBench (coding): correctness jumps from 63.5% to 73.3%, producing cleaner, runnable code on the first attempt more frequently.

- “Humanity’s Last Exam” (expert-level questions across many subjects): scores roughly double, from 8.5% to 17.7%, highlighting stronger complex inference.
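The headline gains are easier to compare side by side as absolute percentage-point changes and relative improvements. This small sketch only recomputes those two figures from the scores listed above; it adds no new data, and `gains` is a hypothetical helper.

```python
# Scores in percent, (before, after), as listed in the article.
SCORES = {
    "AIME 2025": (70.0, 87.5),
    "LiveCodeBench": (63.5, 73.3),
    "Humanity's Last Exam": (8.5, 17.7),
}


def gains(before: float, after: float) -> tuple[float, float]:
    """Return (percentage-point gain, relative gain in percent), rounded to 0.1."""
    points = after - before
    relative = points / before * 100
    return round(points, 1), round(relative, 1)


if __name__ == "__main__":
    for name, (before, after) in SCORES.items():
        points, relative = gains(before, after)
        print(f"{name}: {before}% -> {after}% (+{points} pts, +{relative}% relative)")
```

By this arithmetic, Humanity’s Last Exam improves by about 108% in relative terms (slightly more than doubling), while AIME’s 17.5-point jump is the largest absolute gain.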

These gains place R1-0528 within striking distance of OpenAI’s o3 and Google’s Gemini 2.5 Pro on the same benchmarks. While the proprietary models still leverage larger ecosystems, fine-tuned datasets and specialized deployment stacks, DeepSeek’s open-source release delivers competitive performance without the need for restrictive licenses or paywalls.

Community Reception

Since its release, R1-0528 has generated a wave of enthusiasm in developer circles. One AI engineer praised the model for “incredible coding—clean code and passing tests on the first attempt.” Others have noted that R1-0528 is now flirting with the performance of today’s “king” models, fueling speculation that DeepSeek’s next R2 iteration could raise the bar even further.

This positive feedback isn’t limited to code generation. Across AI forums and social media, users applaud the model’s improved reliability, its transparent open-source license and the freedom to tinker without legal or financial constraints. Early adopters report faster prototyping cycles and fewer surprise behaviors, a sign that public weights and permissive licensing resonate with today’s innovation-driven teams.

Looking Ahead

With R1-0528 strengthening DeepSeek’s position as an open-source leader, the company’s roadmap is drawing close attention. Reducing remaining hallucinations and bias is likely to stay a top priority, with further algorithmic tweaks to sharpen accuracy. At the same time, broader third-party integrations, especially via JSON outputs and function calls, could unlock new use cases in enterprise software, robotics and beyond.

Of course, the prospect of a true next-generation “R2” model looms large. Whether that release brings even larger parameter counts, specialized domain versions or novel architectural breakthroughs, the community is watching closely. Equally crucial will be how regulators in the U.S., China and elsewhere choose to approach open-source AI—rules that could shape both access and innovation for years to come.

As AI continues to transform business and society, DeepSeek’s journey shows that high performance, openness and affordability can advance together. R1-0528 is a milestone, but it’s also a springboard for the next wave of collaborative, transparent AI development.